Rapid microservices development with Signadot

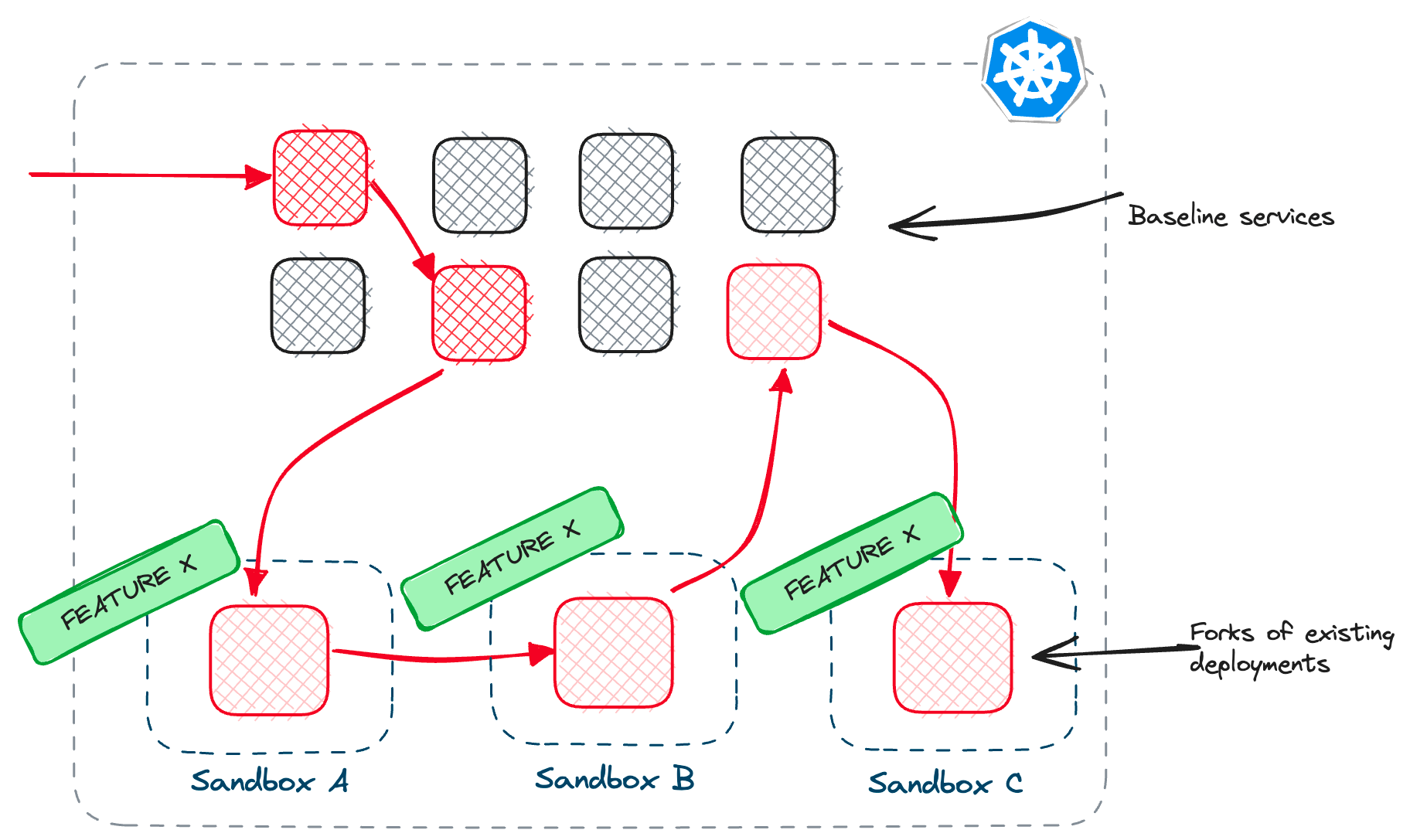

While developing microservices locally is possible, running and testing them in a production-like Kubernetes environment is complex. A typical workflow for developing a service in Kubernetes can significantly slow you down: you build a Docker image, push it, restart the deployments, and test the changes in a shared cluster. And all that assumes you manage to keep the shared cluster up to date! In this article, I'll look at a tool called Signadot. Signadot introduces the concept of sandboxes, which can considerably shorten your developer workflow, from minutes to mere seconds. A sandbox lets you build and run a service locally while using its upstream and downstream dependencies inside a shared cluster.

Typical inner loop

- You write some code, make changes

- You build the code - this includes compiling and building your code and building the container image

- You push the container image to a registry (or load it into a local registry if using a local cluster)

- You update the Kubernetes Deployment or create a new one - this involves restarting or creating new pods

- You test the changes in the cluster with the rest of the services
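The steps above map roughly to the commands below. This is only a sketch: the registry name `registry.example.com/products-api` and the `dev` tag are hypothetical placeholders, and the Deployment and container name `products` are borrowed from the manifests used later in this article.

```shell
# Sketch of the "typical" inner loop. IMAGE and TAG are hypothetical placeholders.
IMAGE="${IMAGE:-registry.example.com/products-api}"
TAG="${TAG:-dev}"

typical_inner_loop() {
  # 1. Build the container image from the current directory
  docker build -t "${IMAGE}:${TAG}" . &&
  # 2. Push the image to the registry
  docker push "${IMAGE}:${TAG}" &&
  # 3. Point the Deployment at the new image (this restarts the pods)
  kubectl set image deployment/products "products=${IMAGE}:${TAG}" &&
  # 4. Wait for the rollout to finish before testing
  kubectl rollout status deployment/products --timeout=120s
}
```

Every step has to finish before you can test a single change, which is where the minutes go.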

Improved inner loop

- Make a code change in one service

- Build/run the application

- Test the change

Development workflow with Signadot

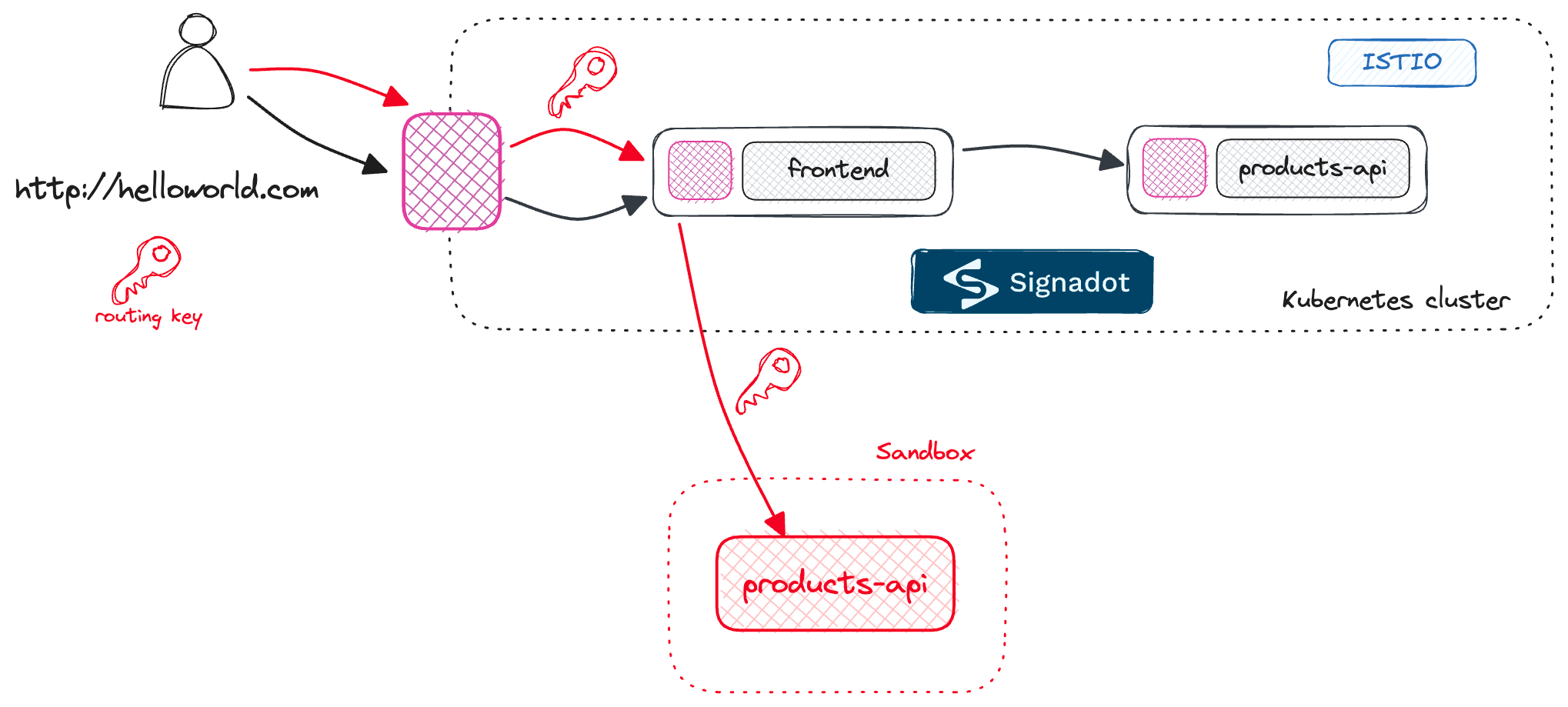

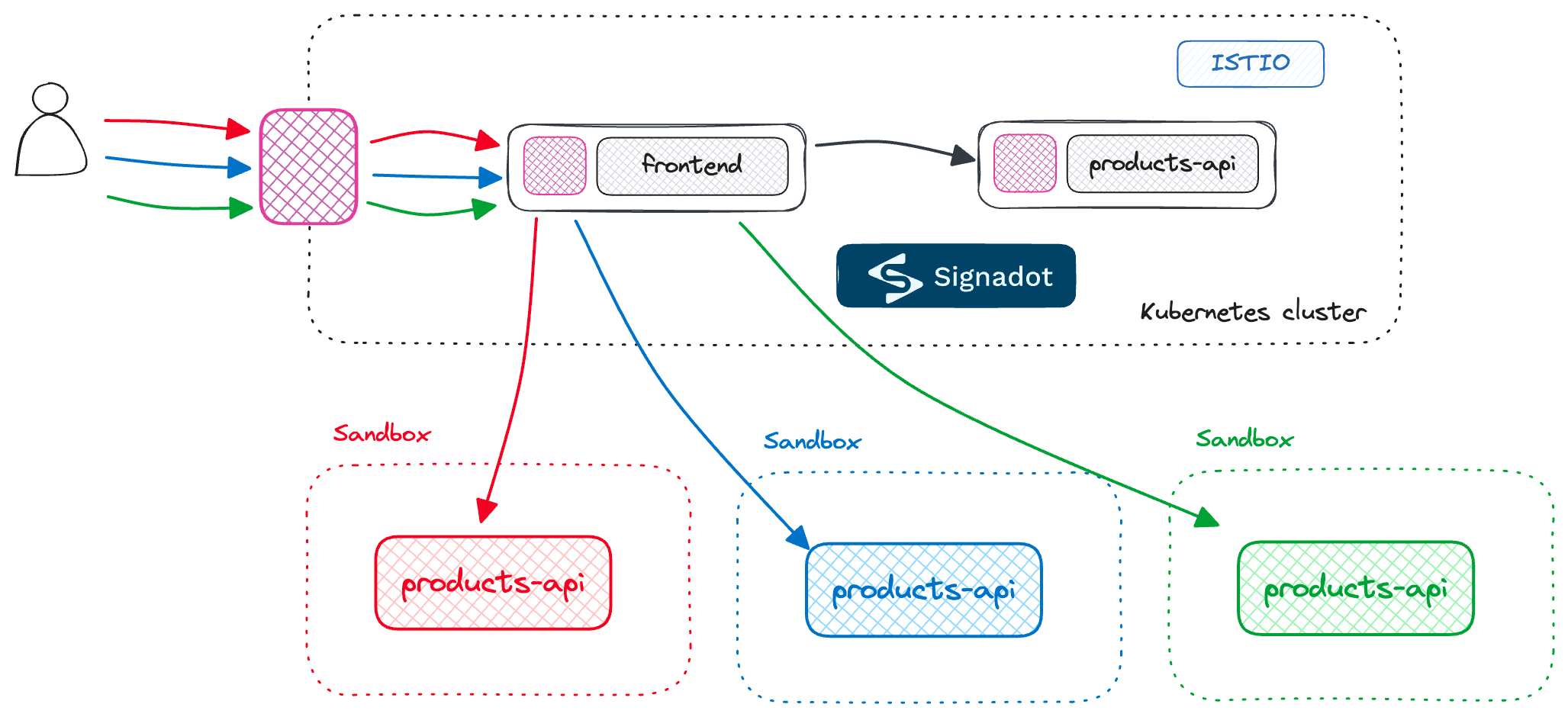

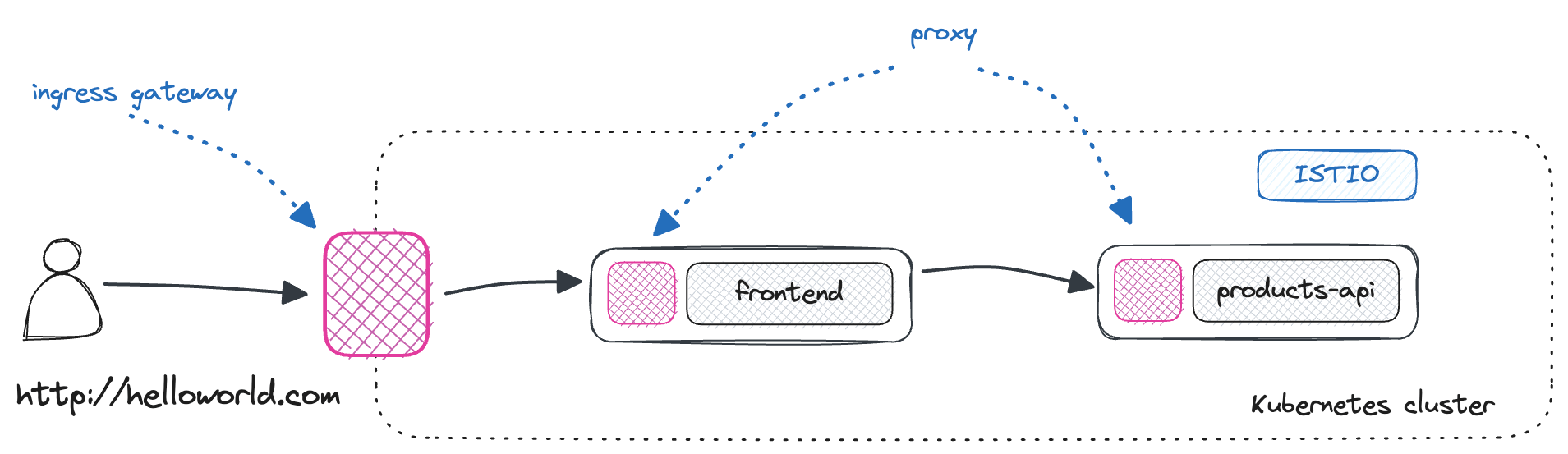

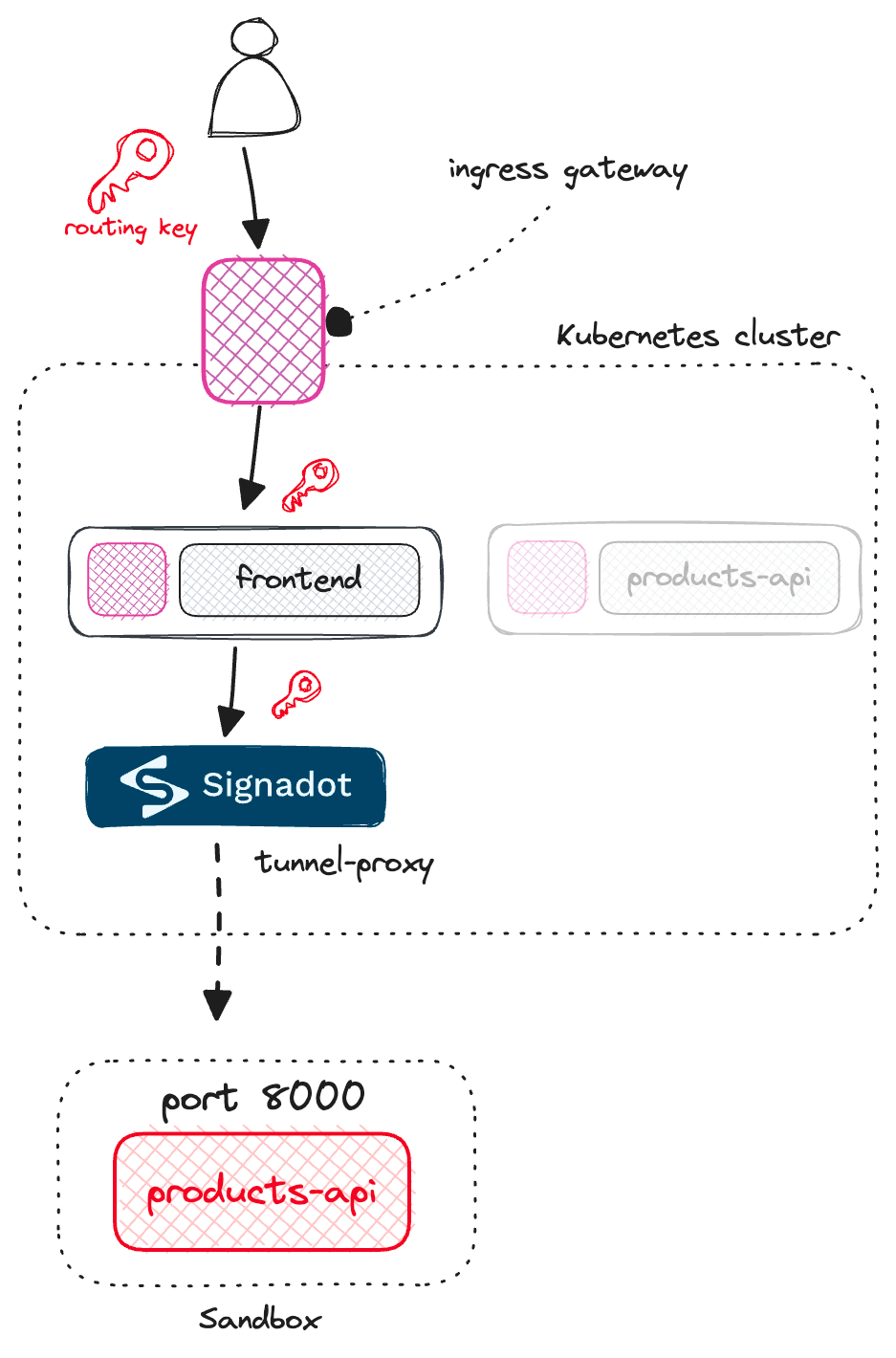

Without a routing key, requests go to the `frontend` and the `products-api` running in the cluster. However, if you include a routing key in your request, the request will go to the `frontend` and then to your instance of the `products-api` that's running locally.

Getting started with Signadot

The examples assume Istio is installed and the `default` namespace is labeled with `istio-injection=enabled`, as we want Istio to inject sidecars into our deployments automatically.

Note

Note that you can also configure Signadot to work without Istio by applying the following annotation to your deployments: `sidecar.signadot.com/inject: "true"`. This annotation tells Signadot to inject a sidecar into your deployment.
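If the namespace isn't labeled yet, a helper along these lines could do it. The function name `enable_istio_injection` is made up for this sketch; the `kubectl label` and `kubectl get --show-labels` commands are standard kubectl.

```shell
enable_istio_injection() {
  # $1: namespace to label (this article uses "default")
  ns="${1:-default}"
  # --overwrite makes the command safe to re-run
  kubectl label namespace "$ns" istio-injection=enabled --overwrite &&
  # Show the namespace labels to confirm the change
  kubectl get namespace "$ns" --show-labels
}
```

Calling `enable_istio_injection default` only affects pods created afterwards, so existing deployments need a restart to get the sidecar.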

The sample application consists of the `frontend` application and the backend `products-api` service. The diagram below shows the application's architecture and our deployment.

Here is the manifest for the `frontend` application.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  labels:
    app: frontend
spec:
  replicas: 1
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: pj3677/frontend-app
          env:
            - name: PORT
              value: '3000'
            # The frontend reaches the backend through the products Service
            - name: BACKEND_SERVICE_URL
              value: http://products.default.svc.cluster.local
          ports:
            - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: frontend
spec:
  selector:
    app: frontend
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
```

A Gateway and a VirtualService expose the `frontend` through the Istio ingress gateway:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: frontend
spec:
  hosts:
    - '*'
  gateways:
    - gateway
  http:
    - name: default
      route:
        - destination:
            host: frontend.default.svc.cluster.local
            port:
              number: 80
---
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: gateway
spec:
  selector:
    app: istio-ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - '*'
```

And here is the manifest for the `products-api`:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: products
  labels:
    app: products
spec:
  replicas: 1
  selector:
    matchLabels:
      app: products
  template:
    metadata:
      labels:
        app: products
    spec:
      containers:
        - name: products
          image: pj3677/products-api
          env:
            - name: PORT
              value: '8000'
          ports:
            - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: products
spec:
  selector:
    app: products
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8000
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: products
spec:
  hosts:
    - 'products'
  http:
    # Default route; Signadot adds its routing-key matches to this VirtualService
    - name: default
      route:
        - destination:
            host: products.default.svc.cluster.local
            port:
              number: 80
```

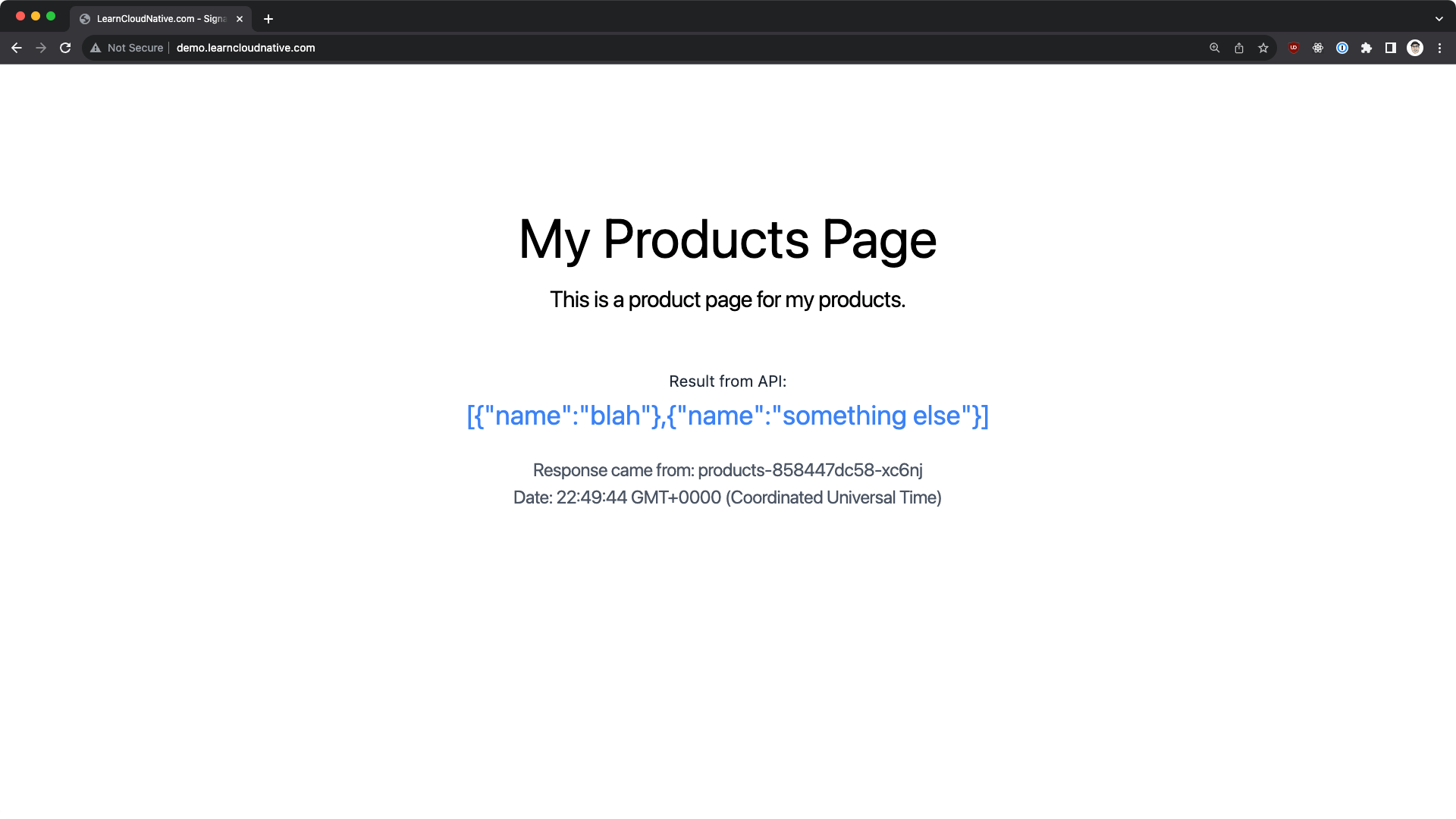

Note the VirtualService for the `products` service; this default route will also be important later on, as Signadot uses it to route traffic based on the routing keys.

The `frontend` calls the `products-api` and shows the results on the page.

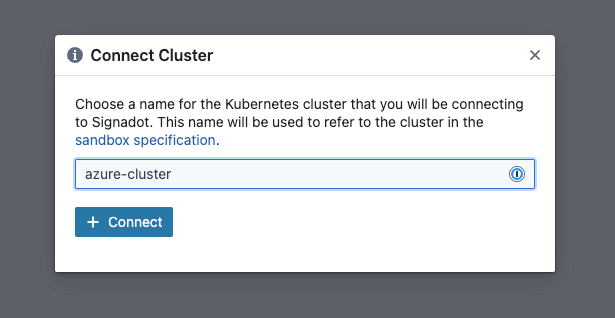

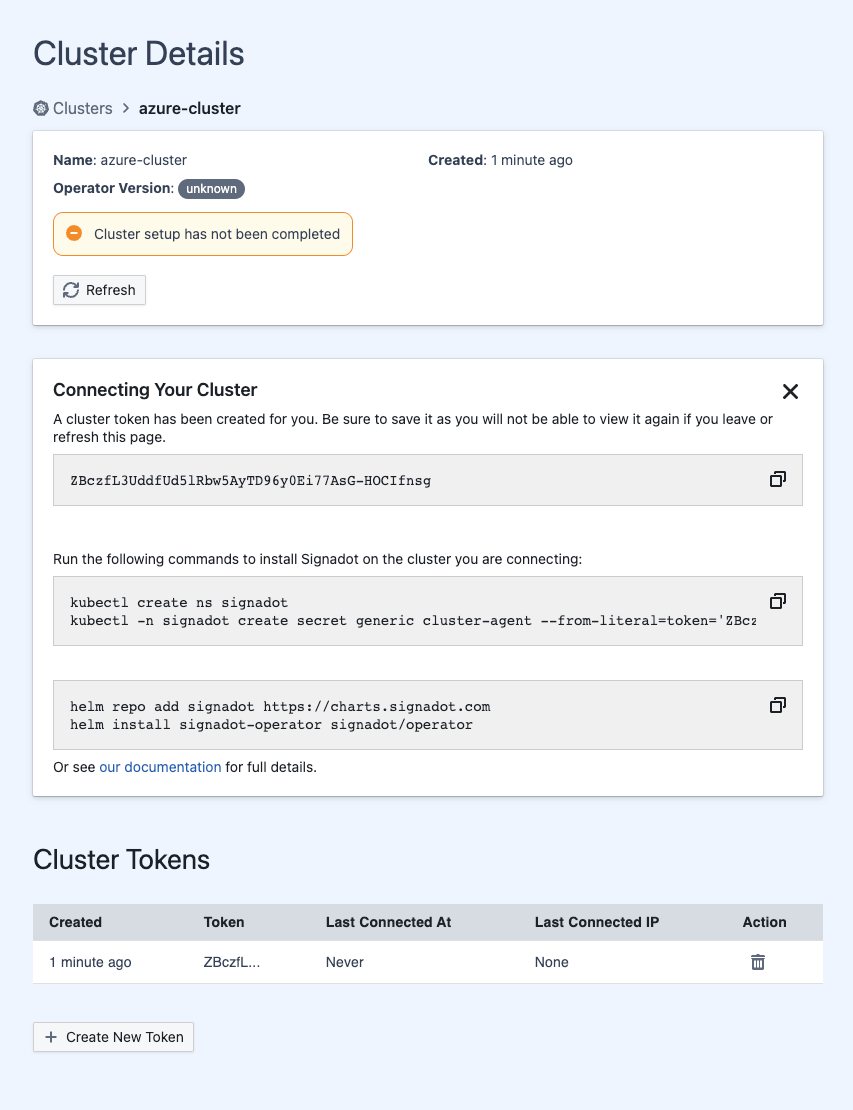

Installing Signadot and connecting a cluster

Store the cluster token in an environment variable (`CLUSTER_TOKEN`) and then create a secret with the token and install the Signadot operator:

```shell
kubectl create ns signadot && \
kubectl -n signadot create secret generic cluster-agent --from-literal=token="$CLUSTER_TOKEN"

# Install the Signadot operator
helm repo add signadot https://charts.signadot.com && \
helm install signadot-operator signadot/operator
```

```
kubectl get po -n signadot
NAME                                           READY   STATUS    RESTARTS   AGE
agent-68ccb9c85d-zxkpm                         2/2     Running   0          82s
io-context-server-68fdf59c76-t6s94             1/1     Running   0          82s
routeserver-9cc96596-v5ldn                     1/1     Running   0          82s
signadot-controller-manager-7f75686754-kz4sc   2/2     Running   0          81s
tunnel-api-6656bddfd8-r7jbb                    1/1     Running   0          82s
tunnel-proxy-69bfcb8598-n7jm9                  2/2     Running   0          82s
```
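Instead of polling the pod list by hand, you could block until all the pods are ready. A minimal sketch, assuming the operator runs in the `signadot` namespace as shown above; the function name and the 180-second timeout are arbitrary choices for this example.

```shell
wait_for_signadot() {
  # Block until every pod in the signadot namespace reports Ready,
  # or fail once the timeout expires
  kubectl -n signadot wait --for=condition=Ready pods --all --timeout=180s
}
```

A non-zero exit code from `wait_for_signadot` means at least one pod did not become ready in time.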

Signadot CLI and configuration

The Signadot CLI reads its configuration from `~/.signadot/config.yaml`. The config file contains the API key to your Signadot account, which you can get from the Signadot Dashboard, and the connections to the shared cluster (baseline environment). Each connection is defined by the cluster name and the kubeContext name.

```yaml
# Values from the settings page and the API keys page
org: <ORGANIZATION NAME>
api_key: <API KEY>

local:
  connections:
    # Name from the cluster details page
    - cluster: <CLUSTER_NAME>
      kubeContext: <KUBERNETES_CONTEXT_NAME>
      type: PortForward
```

```
signadot cluster list
NAME            CREATED                OPERATOR VERSION
azure-cluster   2023-10-25T23:52:03Z   0.13.3
```
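If the CLI can't see your cluster, a quick sanity check of the config file can save some guessing. The helper below is a hypothetical sketch, not part of the Signadot CLI; it only verifies that the file exists and contains the top-level keys used in this article.

```shell
check_signadot_config() {
  # $1: path to the config file (defaults to the location used in this article)
  cfg="${1:-$HOME/.signadot/config.yaml}"
  [ -f "$cfg" ] || { echo "missing config file: $cfg"; return 1; }
  # Each of these top-level keys appears in the config used in this article
  for key in org api_key local; do
    grep -Eq "^${key}:" "$cfg" || { echo "missing key: $key"; return 1; }
  done
  echo "config looks complete"
}
```

It does not validate the values themselves, so a wrong API key or context name will still only surface when you run a CLI command.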

Connecting a cluster and creating a sandbox

To connect to the cluster, use the `local connect` command:

```shell
signadot local connect
```

Note

The connect command requires sudo permissions, so you'll be prompted for the password. Signadot has to update the `/etc/hosts` file with cluster service names and configure the routing from your local machine to the cluster.

You can check the connection with `signadot local status`:

```
signadot local status
* runtime config: cluster azure-cluster, running with root-daemon
✓ Local connection healthy!
    * port-forward listening at ":61890"
    * localnet has been configured
    * 20 hosts accessible via /etc/hosts
* Connected Sandboxes:
    - No active sandbox
```

Open the `/etc/hosts` file, and you'll see the entries Signadot created:

```
...
10.0.247.174 frontend.default # DO NOT EDIT -- added by signadot
10.0.247.174 frontend.default.svc # DO NOT EDIT -- added by signadot
10.0.247.174 frontend.default.svc.cluster # DO NOT EDIT -- added by signadot
...
```
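To list only the entries Signadot manages, you can filter on the marker comment shown above. The function name `signadot_hosts` is made up for this sketch.

```shell
signadot_hosts() {
  # $1: hosts file to inspect (defaults to /etc/hosts)
  hosts_file="${1:-/etc/hosts}"
  # Keep only lines carrying Signadot's marker comment and print "IP hostname"
  grep 'added by signadot' "$hosts_file" | awk '{ print $1, $2 }'
}
```

Running `signadot_hosts | wc -l` gives a count you can compare with the number of hosts reported by `signadot local status`.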

We'll use the following `sandbox.yaml` file to create the sandbox:

```yaml
name: '@{serviceName}-sandbox'
spec:
  cluster: '@{cluster}'
  description: '@{serviceName} local sandbox'
  local:
    - name: 'local-@{serviceName}'
      # The in-cluster workload that the local process stands in for
      from:
        kind: Deployment
        namespace: default
        name: '@{serviceName}'
      mappings:
        - port: 80
          toLocal: 'localhost:8000'
```

`@{serviceName}` and `@{cluster}` are placeholders that will be replaced with the actual values when we create the sandbox.

Note

In the `mappings` section, we're saying we want port `80` on the service to map to `localhost:8000`, where the service will run locally. That way, when a request with a specific routing key comes into our service, Signadot will jump in and route the request to our local service with the routing rules.
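To see what the placeholders expand to, here is a purely illustrative helper that mimics the substitution with `sed`. It is not how Signadot implements it (the CLI does the substitution itself), and the function name `render_template` is an assumption of this sketch.

```shell
render_template() {
  # $1: template file; remaining args: name=value pairs
  tpl="$1"; shift
  content="$(cat "$tpl")"
  for pair in "$@"; do
    name="${pair%%=*}"
    value="${pair#*=}"
    # Replace every literal @{name} with its value
    content="$(printf '%s\n' "$content" | sed "s|@{${name}}|${value}|g")"
  done
  printf '%s\n' "$content"
}
```

For example, `render_template sandbox.yaml cluster=azure-cluster serviceName=products` prints the file with `products-sandbox` as the sandbox name. Values containing `|` or `&` would need escaping, which this sketch skips.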

To create the sandbox, use the `sandbox apply` command, and provide the YAML file and the values for the placeholders:

```
signadot sandbox apply -f sandbox.yaml --set cluster=azure-cluster --set serviceName=products

Created sandbox "products-sandbox" (routing key: rnkqjjzsy7hq0) in cluster "azure-cluster".

Waiting (up to --wait-timeout=3m0s) for sandbox to be ready...
✓ Sandbox status: Ready: All desired workloads are available.

Dashboard page: https://app.signadot.com/sandbox/id/rnkqjjzsy7hq0

The sandbox "products-sandbox" was applied and is ready.
```
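You'll need the routing key later, so it can be handy to pull it out of that output. The sketch below assumes the output contains a `(routing key: ...)` fragment exactly as printed above; the format may differ between CLI versions, and `extract_routing_key` is a name invented for this example.

```shell
extract_routing_key() {
  # Reads CLI output on stdin and prints the value inside "(routing key: ...)"
  sed -n 's/.*(routing key: \([^)]*\)).*/\1/p' | head -n 1
}
```

You would pipe the saved output of `signadot sandbox apply` into it, for example `extract_routing_key < apply-output.txt`.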

If we look at the VirtualService for the `products-api`, we can see the match rules that were added for the sandbox:

```
kubectl get virtualservice products -o yaml
---
http:
  - match:
      - headers:
          baggage:
            regex: ^.*\b(sd-routing-key|sd-sandbox|sd-workspace)\s*=\s*rnkqjjzsy7hq0\b.*$
      - headers:
          tracestate:
            regex: ^.*\b(sd-routing-key|sd-sandbox|sd-workspace)\s*=\s*rnkqjjzsy7hq0\b.*$
      - headers:
          uberctx-sd-routing-key:
            exact: rnkqjjzsy7hq0
      - headers:
          uberctx-sd-sandbox:
            exact: rnkqjjzsy7hq0
      - headers:
          uberctx-sd-workspace:
            exact: rnkqjjzsy7hq0
      - headers:
          ot-baggage-sd-routing-key:
            exact: rnkqjjzsy7hq0
      - headers:
          ot-baggage-sd-sandbox:
            exact: rnkqjjzsy7hq0
      - headers:
          ot-baggage-sd-workspace:
            exact: rnkqjjzsy7hq0
    name: signadot-operator-products-sandbox-rnkqjjzsy7hq0-local-products
    route:
      - destination:
          host: products-sandbox-rnkqjjzsy7hq0-local-products.static
          port:
            number: 45369
```
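To get a feel for which requests these rules catch, the sketch below re-implements the matching logic in plain shell. It is an approximation for illustration only: the `\b` word boundaries are emulated with POSIX character classes, and the function name `matches_sandbox_route` is made up.

```shell
matches_sandbox_route() {
  # $1: header name, $2: header value, $3: routing key
  name="$1"; value="$2"; key="$3"
  case "$name" in
    baggage|tracestate)
      # Emulates the regex rules: the "<name>=<key>" pair must be delimited
      # by non-word characters or by the start/end of the header value
      printf '%s\n' "$value" | grep -Eq \
        "(^|[^[:alnum:]_])(sd-routing-key|sd-sandbox|sd-workspace)[[:space:]]*=[[:space:]]*${key}(\$|[^[:alnum:]_])"
      ;;
    uberctx-sd-routing-key|uberctx-sd-sandbox|uberctx-sd-workspace|\
    ot-baggage-sd-routing-key|ot-baggage-sd-sandbox|ot-baggage-sd-workspace)
      # These headers must equal the routing key exactly
      [ "$value" = "$key" ]
      ;;
    *)
      # Any other header never selects the sandbox route
      return 1
      ;;
  esac
}
```

For instance, `matches_sandbox_route baggage "a=b,sd-routing-key=rnkqjjzsy7hq0" rnkqjjzsy7hq0` succeeds, while a header carrying a different key fails and the request falls through to the default route.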

The route points to the `products-sandbox-rnkqjjzsy7hq0-local-products.static` host, which is defined by a ServiceEntry:

```
kubectl get serviceentry products-sandbox-rnkqjjzsy7hq0-local-products.static -o yaml
...
  addresses:
    - 10.244.0.18/32
  endpoints:
    - address: 10.244.0.18
  hosts:
    - products-sandbox-rnkqjjzsy7hq0-local-products.static
  ports:
    - name: "45369"
      number: 45369
  resolution: STATIC
...
```

The address `10.244.0.18` is the IP of the `tunnel-proxy` that's running in the Signadot namespace. The `tunnel-proxy` is responsible for routing the traffic to the local service. You can confirm this with `kubectl get po -n signadot -o wide | grep 10.244.0.18`:

```
signadot   tunnel-proxy-69bfcb8598-n7jm9   2/2   Running   0   24m   10.244.0.18   aks-agentpool-42059579-vmss000000   <none>   <none>
```
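As an alternative to `grep`, kubectl can filter pods by IP on the server side using a field selector. A small sketch; the function name `pod_for_ip` is hypothetical.

```shell
pod_for_ip() {
  # $1: pod IP to look up, e.g. the endpoint address from the ServiceEntry
  kubectl get pods --all-namespaces -o wide --field-selector "status.podIP=$1"
}
```

`pod_for_ip 10.244.0.18` searches every namespace, which helps when you don't know where the pod behind an address lives.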

Debugging locally with shared Kubernetes cluster

Run the `products-api` service locally on port 8000 - the same port we specified in the sandbox YAML file.

Requests that carry the routing key now flow from the `frontend` service running inside the cluster to a downstream dependency running on your laptop.
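One way to send such a request from the command line is to set the `baggage` header, which is one of the headers matched by the sandbox's VirtualService rules. In this sketch the function name and the URL are placeholders, and it assumes the `frontend` forwards the header to the `products-api` call.

```shell
call_with_routing_key() {
  # $1: URL of the frontend (hypothetical, e.g. the ingress gateway address)
  # $2: routing key printed when the sandbox was created
  curl -s -H "baggage: sd-routing-key=$2" "$1"
}
```

Calling it with the frontend URL and `rnkqjjzsy7hq0` should hit your local breakpoint or log line, while the same request without the header stays entirely inside the cluster.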

Preview environments

Resource plugins

Route groups