Understanding networking in Kubernetes

Explore an in-depth analysis of Kubernetes networking, including container-to-container, pod-to-pod, pod-to-service, ingress, and egress communication. Understand the key roles of the Container Network Interface (CNI), kubelet, and kube-proxy, and learn about the iptables and IPVS modes in kube-proxy.

Four Key Networking Problems Solved by Kubernetes

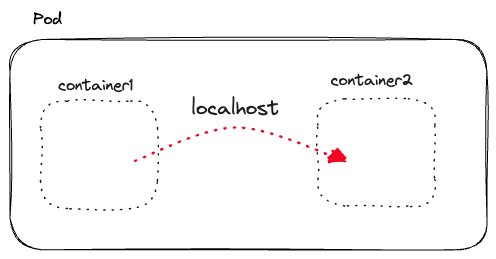

1. Container-to-container communication

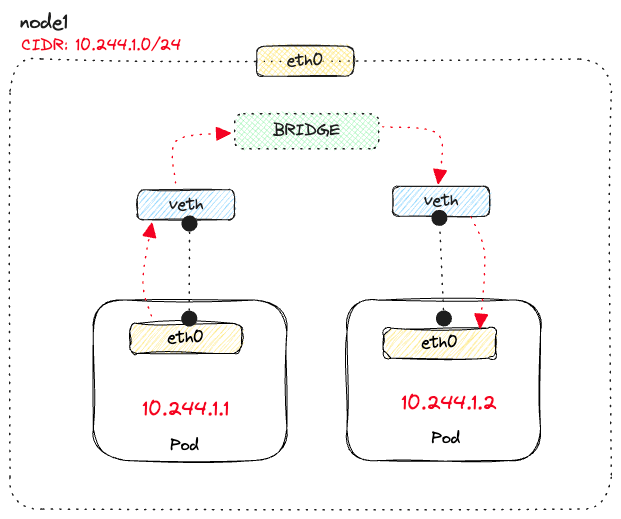

2. Pod-to-pod communication

Note

Classless Inter-Domain Routing (CIDR) is a method for allocating IP addresses and for IP routing. The Internet Engineering Task Force introduced CIDR in 1993 to replace the previous classful network addressing architecture on the Internet. Its goal was to slow the growth of routing tables on routers across the Internet, and to help slow the rapid exhaustion of IPv4 addresses.
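To make the notation concrete, here's a minimal Python sketch using the standard library ipaddress module. The prefixes come from the kind cluster used later in this article (10.244.0.0/16 for pods, with a /24 slice per node). Nothing in the sketch is Kubernetes-specific.

```python
import ipaddress

# A /16 prefix fixes the first 16 bits, leaving 2**16 addresses.
pod_network = ipaddress.ip_network("10.244.0.0/16")
print(pod_network.num_addresses)  # 65536

# Each node gets a smaller /24 slice (256 addresses) of the pod network.
node2_pods = ipaddress.ip_network("10.244.2.0/24")
print(node2_pods.subnet_of(pod_network))  # True

# Membership test: does a given pod IP fall inside node2's range?
print(ipaddress.ip_address("10.244.2.2") in node2_pods)  # True
print(ipaddress.ip_address("10.244.1.1") in node2_pods)  # False
```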

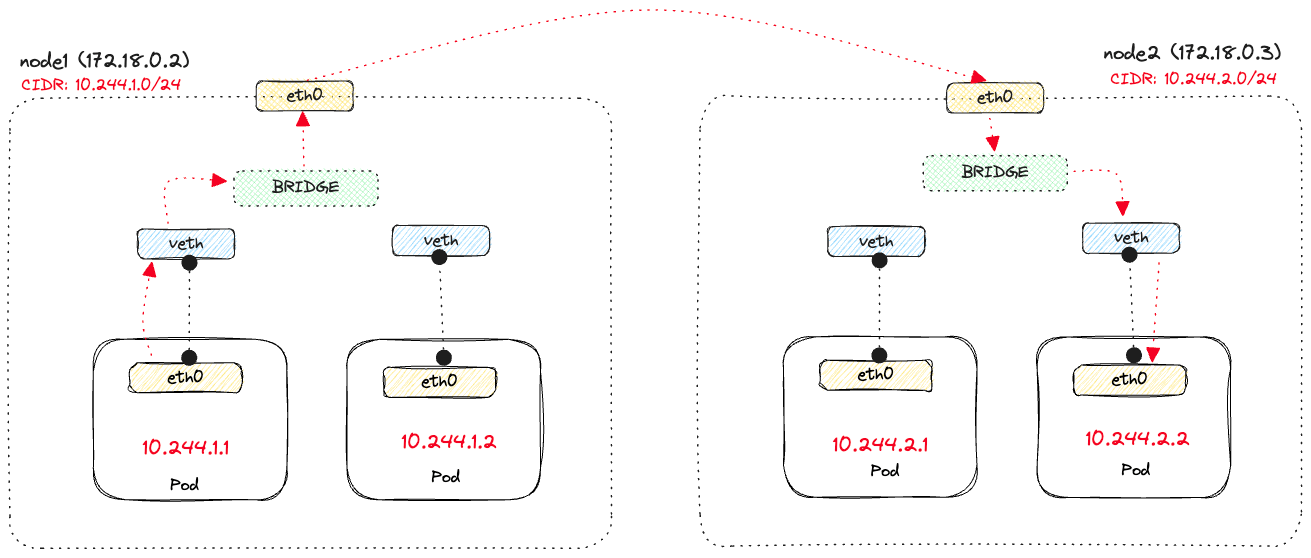

Virtual Ethernet (veth) devices come in pairs and connect network namespaces: a packet sent into one end of the pair comes out of the other. Each pod that runs on the node has a veth pair connecting the pod's network namespace to the node's network namespace. One end is the interface inside the pod (eth0), and the other end connects to the root network namespace on the node.

Note

eth0 is a naming convention used in computer networking to refer to the first Ethernet network interface on a device. It is commonly used in Linux-based operating systems to identify the primary wired Ethernet interface.

When both pods run on the same node, traffic goes from the eth0 interface in the pod to the veth interface on the node side, then through the virtual bridge to another virtual interface that's connected to eth0 of the destination pod.

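When the pods run on different nodes, the traffic also has to travel from eth0 on node1 to eth0 on node2. In a kind cluster, this is handled by kindnet, which runs as a DaemonSet. It is responsible for setting up the routes between nodes and allows one pod to call another pod using its IP address.

Note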

A DaemonSet resource in Kubernetes ensures that all (or some) nodes run a copy of a Pod.

Here's the routing table on node1 (IP 172.18.0.2). Amongst other things, it defines where packets for pods running on node2 (IP 172.18.0.3) should be sent:

```
default via 172.18.0.1 dev eth0
10.244.0.0/24 via 172.18.0.4 dev eth0
10.244.2.0/24 via 172.18.0.3 dev eth0
10.244.1.1 dev veth2609878b scope host
10.244.1.1 dev veth23974a9d scope host
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.2
```

The line 10.244.2.0/24 via 172.18.0.3 dev eth0 says that any packets destined to IPs in that CIDR range, which are the pods running on node2, should be sent to 172.18.0.3 via the eth0 interface. The diagram below shows the flow of sending a request from 10.244.1.1 on node1 to pod 10.244.2.2 on node2.
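The kernel chooses a route by longest-prefix match: among all entries whose CIDR contains the destination, the most specific one wins. The Python sketch below models that lookup against node1's routing table. It is a simplification under stated assumptions: the two veth host routes are left out, and the real kernel also weighs route metrics, scopes, and policy rules. The ROUTES table and lookup function are hypothetical names.

```python
import ipaddress

# node1's routes as (destination, next hop, device); a next hop of None
# means the destination is directly reachable on that device.
ROUTES = [
    ("0.0.0.0/0", "172.18.0.1", "eth0"),      # default route
    ("10.244.0.0/24", "172.18.0.4", "eth0"),  # pods on the control-plane node
    ("10.244.2.0/24", "172.18.0.3", "eth0"),  # pods on node2
    ("172.18.0.0/16", None, "eth0"),          # the node network itself
]

def lookup(dst: str):
    """Return (next_hop, device) of the most specific route containing dst."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(net), hop, dev)
        for net, hop, dev in ROUTES
        if addr in ipaddress.ip_network(net)
    ]
    # Longest prefix wins: a /24 beats a /16, which beats the /0 default.
    _net, hop, dev = max(matches, key=lambda m: m[0].prefixlen)
    return hop, dev

print(lookup("10.244.2.2"))  # ('172.18.0.3', 'eth0')
print(lookup("172.18.0.3"))  # (None, 'eth0')
print(lookup("8.8.8.8"))     # ('172.18.0.1', 'eth0')
```

Because the default route matches everything, the list of matches is never empty; a packet for pod 10.244.2.2 leaves node1 toward node2's address 172.18.0.3.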

3. Pod-to-service communication

- How to assign an IP address to the service?

- How to route traffic sent to the service IP to the backing pod IPs?

- How to keep track of the pods that are backing the service?

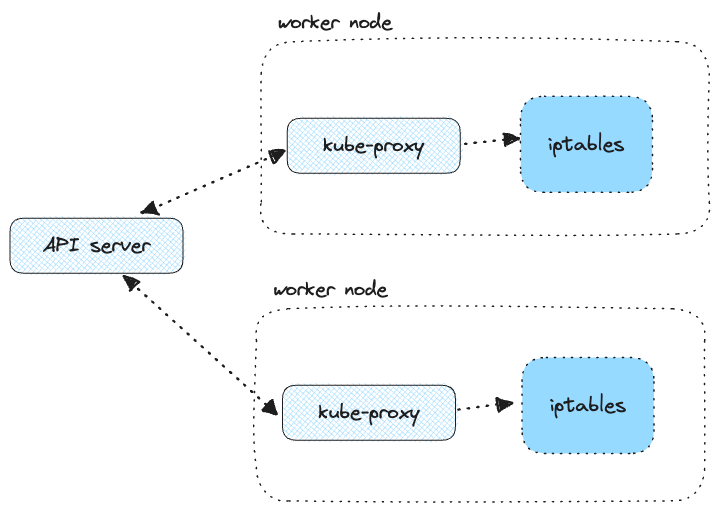

On each node, kube-proxy handles this using iptables or IPVS (IP Virtual Server).

Note

iptables is a user-space utility program that allows a system administrator to configure the IP packet filter rules of the Linux kernel firewall, implemented as different Netfilter modules. The filters are organized in different tables, which contain chains of rules for how to treat network traffic packets.
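The three questions above boil down to an address allocator, a lookup table, and a way to pick a backend. The Python sketch below models them in a few lines. The ServiceTable class and its methods are hypothetical and only illustrate the idea; in a real cluster the API server allocates cluster IPs, endpoint tracking is done by the control plane, and kube-proxy programs the node. The 10.96.0.0/16 service range is an assumption chosen to match the cluster IPs that show up later in the kind cluster.

```python
import ipaddress
import random

class ServiceTable:
    """Hypothetical toy model of the three service problems (not kube-proxy code)."""

    def __init__(self, service_cidr: str):
        # 1. Assigning IPs: hand out addresses from a reserved service range.
        self._free = ipaddress.ip_network(service_cidr).hosts()
        self.endpoints = {}  # (cluster_ip, port) -> list of "podIP:port"

    def create(self, port: int) -> str:
        cluster_ip = str(next(self._free))
        self.endpoints[(cluster_ip, port)] = []
        return cluster_ip

    # 3. Tracking pods: replace the endpoint list as pods come and go.
    def set_endpoints(self, cluster_ip: str, port: int, pods: list) -> None:
        self.endpoints[(cluster_ip, port)] = list(pods)

    # 2. Routing: translate the service address into one backing pod address.
    # A uniform random pick stands in for the real iptables/IPVS mechanisms.
    def route(self, cluster_ip: str, port: int) -> str:
        return random.choice(self.endpoints[(cluster_ip, port)])

table = ServiceTable("10.96.0.0/16")
ip = table.create(8000)
table.set_endpoints(ip, 8000, ["10.244.1.2:80", "10.244.2.2:80"])
print(ip)                     # 10.96.0.1
print(table.route(ip, 8000))  # one of the two pod addresses
```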

4. Ingress and egress communication

Egress communication (traffic exiting the cluster)

For traffic leaving the cluster, iptables ensures that the source IP of the pod is changed to the internal IP address of the node (VM). Typically, when running a cloud-hosted cluster, the nodes have private IPs and run inside a virtual private cloud (VPC) network.

Ingress communication (traffic entering the cluster)

Unpacking the Role of Kubelet in Kubernetes

When the kubelet starts a pod, it calls the ADD command on the CNI. A call to the ADD command tells the CNI to create the pod network.

Role of kube-proxy

- iptables

- ipvs

- userspace

- kernelspace

Note

All of the Linux kube-proxy modes listed above rely on iptables to some degree.

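The userspace mode uses iptables to send traffic for service IP addresses to a web server (kube-proxy itself), and the web server is responsible for proxying to the pods. You shouldn't use this mode anymore.

The kernelspace mode is the alternative to the userspace mode for Kubernetes on Windows.

How does the iptables kube-proxy mode work?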

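To see the iptables mode in action, create a kind cluster using the following kind-iptables.yaml file:

```yaml
# kube-proxy runs in iptables mode by default, so no networking section is needed.
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
```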

```shell
kind create cluster --config=kind-iptables.yaml
```

Next, deploy httpbin and scale it up to 3 replicas:

```shell
kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/httpbin/httpbin.yaml
kubectl scale deployment httpbin -n default --replicas=3
```

This gives us the httpbin workload (3 replicas) and a corresponding Kubernetes service. Let's look at the iptables rules that get set up on one of the cluster nodes.

Note

Since we're using kind, we can look at the Docker container (representing our node) and then use docker exec to run the iptables command inside that node.

```shell
iptables -L -t nat > iptables.txt
```

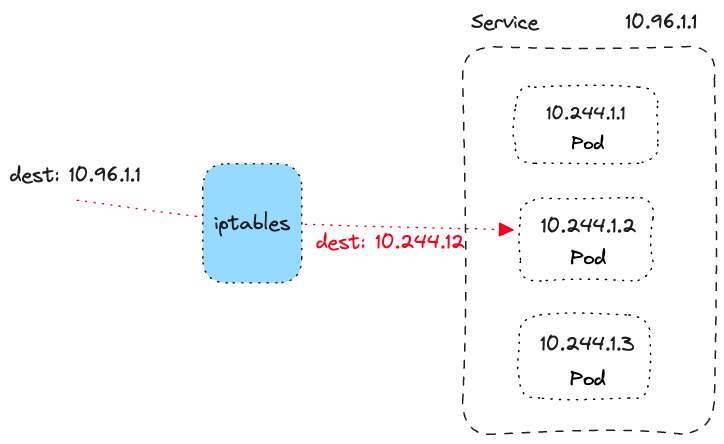

From the PREROUTING chain, the traffic gets routed to the KUBE-SERVICES chain, which contains rules for each service in the cluster. For example:

```
Chain KUBE-SERVICES (2 references)
target prot opt source destination
KUBE-SVC-FREKB6WNWYJLKTHC tcp -- anywhere 10.96.120.233 /* default/httpbin:http cluster IP */ tcp dpt:8000
KUBE-SVC-NPX46M4PTMTKRN6Y tcp -- anywhere 10.96.0.1 /* default/kubernetes:https cluster IP */ tcp dpt:https
KUBE-SVC-ERIFXISQEP7F7OF4 tcp -- anywhere 10.96.0.10 /* kube-system/kube-dns:dns-tcp cluster IP */ tcp dpt:domain
KUBE-SVC-JD5MR3NA4I4DYORP tcp -- anywhere 10.96.0.10 /* kube-system/kube-dns:metrics cluster IP */ tcp dpt:9153
KUBE-SVC-TCOU7JCQXEZGVUNU udp -- anywhere 10.96.0.10 /* kube-system/kube-dns:dns cluster IP */ udp dpt:domain
KUBE-NODEPORTS all -- anywhere anywhere /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL
```

In the first rule, 10.96.120.233 is the IP address of the Kubernetes service (in this case, httpbin in the default namespace). The last portion, tcp dpt:8000, specifies that this rule applies to TCP traffic destined for port 8000. In other words, traffic to the httpbin.default service must be processed by the KUBE-SVC-FREKB6WNWYJLKTHC chain:

```
Chain KUBE-SVC-FREKB6WNWYJLKTHC (1 references)
target prot opt source destination
KUBE-MARK-MASQ tcp -- !10.244.0.0/16 10.96.120.233 /* default/httpbin:http cluster IP */ tcp dpt:8000
KUBE-SEP-PFKDKMHHACIMKSSX all -- anywhere anywhere /* default/httpbin:http -> 10.244.1.2:80 */ statistic mode random probability 0.25000000000
KUBE-SEP-Q7UD3MC3WPZFKDWM all -- anywhere anywhere /* default/httpbin:http -> 10.244.1.3:80 */ statistic mode random probability 0.33333333349
KUBE-SEP-66R3JWVSZK6BYGSL all -- anywhere anywhere /* default/httpbin:http -> 10.244.2.2:80 */ statistic mode random probability 0.50000000000
KUBE-SEP-XIWLPFJKMVHRQV3W all -- anywhere anywhere /* default/httpbin:http -> 10.244.2.3:80 */
```
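The statistic mode random probability values in this chain look odd at first (0.25, 0.333..., 0.5, and no condition on the last rule), but they produce an even split across the four endpoints. iptables tries the rules in order, so each rule only sees the traffic that fell through the earlier ones; giving rule i a probability of 1/(number of endpoints left) makes every endpoint equally likely. A minimal Python check using exact fractions (the function names are hypothetical):

```python
from fractions import Fraction

def rule_probabilities(n: int) -> list:
    """Probability on the i-th of n rules; the last one (1) matches unconditionally."""
    return [Fraction(1, n - i) for i in range(n)]

def selection_chances(probs: list) -> list:
    """Overall chance that each rule is the one that ends up matching."""
    chances, remaining = [], Fraction(1)
    for p in probs:
        chances.append(remaining * p)  # traffic reached this rule AND it fired
        remaining *= 1 - p             # traffic fell through to the next rule
    return chances

probs = rule_probabilities(4)
print([float(p) for p in probs])  # [0.25, 0.3333333333333333, 0.5, 1.0]
print(selection_chances(probs))   # [Fraction(1, 4), Fraction(1, 4), Fraction(1, 4), Fraction(1, 4)]
```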

The first rule marks all packets going to the httpbin service that don't come from the 10.244.0.0/16 source subnet (i.e., they aren't originating from within the cluster) for masquerading (KUBE-MARK-MASQ chain). The KUBE-MARK-MASQ chain is responsible for marking packets that need to be masqueraded; it marks all packets with 0x4000:

```
Chain KUBE-MARK-MASQ (16 references)
target prot opt source destination
MARK all -- anywhere anywhere MARK or 0x4000
```

Note

What is masquerading? Masquerading is a form of SNAT (Source Network Address Translation) used when the source address of outbound packets should be changed to the address of the outgoing network interface. For example, when packets leave the node, they should carry the node's external IP instead of an internal one.
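The Python sketch below separates the two halves of masquerading: KUBE-MARK-MASQ only sets the 0x4000 bit, and the source rewrite happens later. It assumes, as in kube-proxy's iptables mode, that a POSTROUTING rule (not shown in the output above) masquerades packets carrying that bit. The function names and the example source address are hypothetical; 172.18.0.2 is node1's address from the routing table earlier.

```python
import ipaddress

MASQ_MARK = 0x4000  # the bit set by "MARK or 0x4000"
POD_CIDR = ipaddress.ip_network("10.244.0.0/16")

def kube_mark_masq(mark: int) -> int:
    # OR sets the 0x4000 bit without touching any other bits in the mark.
    return mark | MASQ_MARK

def postrouting(src: str, mark: int, node_ip: str) -> str:
    """Assumed later step: SNAT packets carrying the bit to the node's address."""
    if mark & MASQ_MARK:
        return node_ip
    return src

# A packet from outside the pod network, headed for the httpbin cluster IP,
# matches the "!10.244.0.0/16" rule and therefore gets marked.
src = "172.18.0.1"
mark = 0
if ipaddress.ip_address(src) not in POD_CIDR:
    mark = kube_mark_masq(mark)
print(hex(mark))                             # 0x4000
print(postrouting(src, mark, "172.18.0.2"))  # 172.18.0.2
```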

The remaining rules in the KUBE-SVC chain jump to KUBE-SEP (service endpoint) chains, which correspond to the pods backing the service. For example, here's one of the chains for the httpbin pods:

```
Chain KUBE-SEP-PFKDKMHHACIMKSSX (1 references)
target prot opt source destination
KUBE-MARK-MASQ all -- 10.244.1.2 anywhere /* default/httpbin:http */
DNAT tcp -- anywhere anywhere /* default/httpbin:http */ tcp to:10.244.1.2:80
```
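Putting the three chains together, the nat-table path for a packet sent to the httpbin cluster IP can be modeled as a few dictionary lookups. This Python sketch copies the chain names, probabilities, and pod addresses from the output above; the nat_prerouting function is hypothetical, and it skips the KUBE-MARK-MASQ rules to focus on how the destination gets rewritten.

```python
import random

# KUBE-SERVICES: (destination IP, protocol, port) -> per-service chain.
KUBE_SERVICES = {("10.96.120.233", "tcp", 8000): "KUBE-SVC-FREKB6WNWYJLKTHC"}

# KUBE-SVC chain: rules tried in order, each with its statistic probability.
KUBE_SVC = {
    "KUBE-SVC-FREKB6WNWYJLKTHC": [
        (0.25, "KUBE-SEP-PFKDKMHHACIMKSSX"),
        (1 / 3, "KUBE-SEP-Q7UD3MC3WPZFKDWM"),
        (0.5, "KUBE-SEP-66R3JWVSZK6BYGSL"),
        (1.0, "KUBE-SEP-XIWLPFJKMVHRQV3W"),  # last rule: no statistic match
    ]
}

# KUBE-SEP chains: the DNAT target of each endpoint.
KUBE_SEP_DNAT = {
    "KUBE-SEP-PFKDKMHHACIMKSSX": ("10.244.1.2", 80),
    "KUBE-SEP-Q7UD3MC3WPZFKDWM": ("10.244.1.3", 80),
    "KUBE-SEP-66R3JWVSZK6BYGSL": ("10.244.2.2", 80),
    "KUBE-SEP-XIWLPFJKMVHRQV3W": ("10.244.2.3", 80),
}

def nat_prerouting(dst_ip, proto, dport, rng=random.random):
    """Return the (possibly DNAT-ed) destination of a packet."""
    svc_chain = KUBE_SERVICES.get((dst_ip, proto, dport))
    if svc_chain is None:
        return dst_ip, dport  # not a service IP: leave it untouched
    for probability, sep_chain in KUBE_SVC[svc_chain]:
        # Each statistic match draws its own random number, like iptables does.
        if rng() < probability:
            return KUBE_SEP_DNAT[sep_chain]  # rewrite to the pod's IP:port
    raise RuntimeError("unreachable: the last rule always matches")

print(nat_prerouting("10.96.120.233", "tcp", 8000, rng=lambda: 0.0))  # ('10.244.1.2', 80)
```

Passing a fixed rng makes the walk deterministic for testing; with the default random.random, repeated calls spread roughly evenly over the four pods.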

The KUBE-MARK-MASQ rule applies to packets going out of the pod (source 10.244.1.2) and marks the outgoing packets with 0x4000. The DNAT rule changes the destination IP address (the cluster IP of the httpbin service) to the IP address of the pod (tcp to:10.244.1.2:80). This is the rule that redirects to the actual pod IP.

How does the IPVS kube-proxy mode work?

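To try the IPVS mode, create a kind cluster with the following kind-ipvs.yaml file, which sets kube-proxy to ipvs mode:

```yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  kubeProxyMode: 'ipvs'
nodes:
- role: control-plane
- role: worker
- role: worker
```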

```shell
kind create cluster --config=kind-ipvs.yaml
```

Then deploy httpbin and scale it up to 3 replicas:

```shell
kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/httpbin/httpbin.yaml
kubectl scale deployment httpbin -n default --replicas=3
```

Note

Ensure you run kubectl get pods -o wide and note the Kubernetes node where the httpbin pods are running. Then, you can run docker ps to get the container ID of that same node (or use the node name) and use docker exec to run the iptables command on that node.

```shell
iptables -L -t nat > iptables-ipvs.txt
```

Here's what the KUBE-SERVICES chain looks like:

```
Chain KUBE-SERVICES (2 references)
target prot opt source destination
KUBE-MARK-MASQ all -- !10.244.0.0/16 anywhere /* Kubernetes service cluster ip + port for masquerade purpose */ match-set KUBE-CLUSTER-IP dst,dst
KUBE-NODE-PORT all -- anywhere anywhere ADDRTYPE match dst-type LOCAL
ACCEPT all -- anywhere anywhere match-set KUBE-CLUSTER-IP dst,dst
```

These rules reference the KUBE-CLUSTER-IP IP set, which contains the Kubernetes service IP addresses. We can use the ipset tool to list the contents of the set:

```shell
apt-get update -y
apt-get install -y ipset
ipset list KUBE-CLUSTER-IP
```

```
Name: KUBE-CLUSTER-IP
Type: hash:ip,port
Revision: 6
Header: family inet hashsize 1024 maxelem 65536 bucketsize 12 initval 0xa3af5d2d
Size in memory: 440
References: 2
Number of entries: 5
Members:
10.96.0.10,tcp:9153
10.96.0.10,udp:53
10.96.0.1,tcp:443
10.96.0.10,tcp:53
10.96.54.116,tcp:8000
```
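The set's type, hash:ip,port, is what keeps this approach cheap: checking a packet against every service becomes a single hash lookup on the (IP, protocol, port) triple instead of a walk through one rule per service. A Python set is a reasonable stand-in for the idea. The is_cluster_ip function is hypothetical, and the entries are copied from the output above.

```python
# Members of KUBE-CLUSTER-IP, stored as (ip, protocol, port) tuples to
# mirror the set's hash:ip,port type.
KUBE_CLUSTER_IP = {
    ("10.96.0.10", "tcp", 9153),
    ("10.96.0.10", "udp", 53),
    ("10.96.0.1", "tcp", 443),
    ("10.96.0.10", "tcp", 53),
    ("10.96.54.116", "tcp", 8000),
}

def is_cluster_ip(dst_ip: str, proto: str, dport: int) -> bool:
    # "match-set KUBE-CLUSTER-IP dst,dst" checks the packet's destination IP
    # and destination port against the set: one hash lookup, however many
    # services exist.
    return (dst_ip, proto, dport) in KUBE_CLUSTER_IP

print(is_cluster_ip("10.96.54.116", "tcp", 8000))  # True
print(is_cluster_ip("10.96.54.116", "udp", 8000))  # False
```

Adding a service in this model is one set insertion; the iptables rules that reference the set never change.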

The member 10.96.54.116,tcp:8000 corresponds to the httpbin Kubernetes service. In the iptables mode, the service IPs were stored inside the iptables rules. With ipvs, the IPs are stored in the ipset, and the iptables rules only reference the ipset. Storing the IPs outside the rules means only the ipsets need to be modified, instead of traversing the iptables chains and modifying the rules.

Next, install the ipvsadm tool so that we can look at the configuration of the IPVS proxy:

```shell
apt-get install -y ipvsadm
```

When we run ipvsadm -L -n, we get the following output:

```
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.96.0.1:443 rr
  -> 172.18.0.4:6443 Masq 1 0 0
TCP 10.96.0.10:53 rr
  -> 10.244.0.2:53 Masq 1 0 0
  -> 10.244.0.4:53 Masq 1 0 0
TCP 10.96.0.10:9153 rr
  -> 10.244.0.2:9153 Masq 1 0 0
  -> 10.244.0.4:9153 Masq 1 0 0
TCP 10.96.54.116:8000 rr
  -> 10.244.1.2:80 Masq 1 0 0
  -> 10.244.1.3:80 Masq 1 0 0
  -> 10.244.2.2:80 Masq 1 0 0
  -> 10.244.2.3:80 Masq 1 0 0
UDP 10.96.0.10:53 rr
  -> 10.244.0.2:53 Masq 1 0 0
  -> 10.244.0.4:53 Masq 1 0 0
```
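Each TCP or UDP line in this output is a virtual service using the rr scheduler, and each -> line is a real server with weight 1. With equal weights, round-robin simply hands new connections to the backends in turn, with no randomness involved. A minimal Python sketch with itertools.cycle, assuming equal weights and ignoring the per-connection tracking IPVS does; the round_robin helper is hypothetical:

```python
import itertools

# Real servers behind the httpbin virtual service 10.96.54.116:8000.
HTTPBIN_BACKENDS = ["10.244.1.2:80", "10.244.1.3:80", "10.244.2.2:80", "10.244.2.3:80"]

def round_robin(backends):
    """Yield backends in a fixed rotation, like rr with equal weights."""
    return itertools.cycle(backends)

scheduler = round_robin(HTTPBIN_BACKENDS)
picks = [next(scheduler) for _ in range(6)]
print(picks)
# ['10.244.1.2:80', '10.244.1.3:80', '10.244.2.2:80', '10.244.2.3:80', '10.244.1.2:80', '10.244.1.3:80']
```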

Note the entry for 10.96.54.116:8000 corresponding to the httpbin service; the lines that follow are the IP addresses of the backing pods. The rr in the output stands for round-robin. In contrast, iptables has no load-balancing algorithms of its own, so kube-proxy uses random probabilities to distribute the traffic.

Frequently asked questions

1. What is a Container Network Interface (CNI)?

2. What are the four networking problems Kubernetes aims to solve?

3. How does container-to-container communication work in Kubernetes?

Containers in the same pod share a network namespace, so they can talk to each other using localhost.

4. How is pod-to-pod communication achieved in Kubernetes?

5. What role does the CNI play in pod-to-pod communication?

6. What is kube-proxy and what is its role in Kubernetes?

kube-proxy is a network proxy that runs on each node in a Kubernetes cluster. It keeps the services and their backing endpoints (pod IPs) up to date and manages the routing rules on the nodes.

7. How does Kubernetes handle ingress and egress communication?

For egress communication, Kubernetes uses iptables to change the source IP of the pod to the internal IP address of the node (VM). For ingress communication, a Kubernetes LoadBalancer service provides an external IP address, allowing traffic from the internet to reach the cluster.

8. What is kubelet and what role does it play in Kubernetes?

Kubelet is a component that runs on every node in a Kubernetes cluster. It manages the pods scheduled on the node, provides status updates about the node and pods, and sets up container networking through the CNI.

9. What is the difference between iptables and IPVS modes in kube-proxy?

iptables and IPVS are two of the modes of kube-proxy. The iptables mode, which is the default, relies solely on iptables for traffic routing and can become slow with many rules. IPVS, on the other hand, keeps services in kernel hash tables and supports multiple load-balancing algorithms, making it a better-performing alternative in large clusters.