How to configure a rate limiter in Istio

In this article, you'll learn how rate limiting works in Envoy and how to configure a local rate limiter on the workloads inside the mesh, as well as at the edge of the mesh for the ingress and egress gateways.

What is rate limiting?

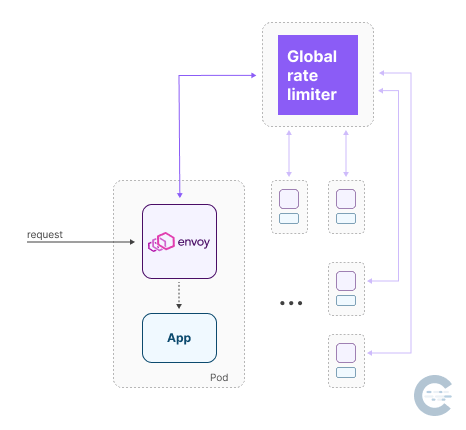

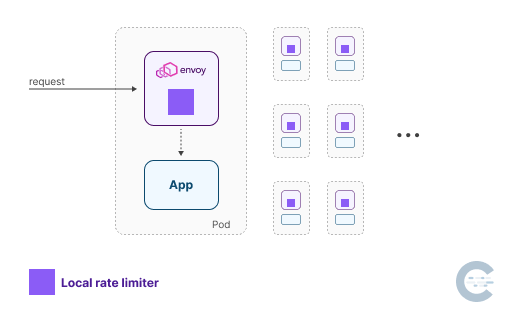

Local vs. global rate limiting

- global (or distributed) rate limiting

- local rate limiting

Note

The typical use case for the global rate limiter is when many hosts send requests to a smaller number of services. In this case, the numerous hosts can overwhelm the services, and the global rate limiter can help to prevent cascading failures. For example, we can configure a global rate limiter at the ingress gateway and control the total number of requests entering the mesh. Then, once those requests are inside the mesh, we can use local rate limiters to manage the number of requests sent to specific services.

How does the local rate limiter work?

- the maximum number of tokens the bucket can hold

- the rate at which the bucket gets filled (fill interval)

- the number of tokens that get added to the bucket with each fill interval
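The three settings above can be illustrated with a small simulation. This is only a minimal sketch of a token bucket, not Envoy's implementation: the `TokenBucket` class is a hypothetical helper, the numbers are sample values, time is passed in explicitly as seconds, and the bucket is assumed to start full.

```python
class TokenBucket:
    """Simplified token bucket (illustrative only, not Envoy's code)."""

    def __init__(self, max_tokens, tokens_per_fill, fill_interval):
        self.max_tokens = max_tokens            # maximum tokens the bucket can hold
        self.tokens_per_fill = tokens_per_fill  # tokens added at each fill
        self.fill_interval = fill_interval      # seconds between fills
        self.tokens = max_tokens                # assumption: the bucket starts full
        self.last_fill = 0.0

    def _refill(self, now):
        # Add tokens for every whole fill interval that has elapsed,
        # never exceeding the bucket capacity.
        intervals = int((now - self.last_fill) // self.fill_interval)
        if intervals > 0:
            self.tokens = min(self.max_tokens,
                              self.tokens + intervals * self.tokens_per_fill)
            self.last_fill += intervals * self.fill_interval

    def allow(self, now):
        """Return True if a request arriving at time `now` gets a token."""
        self._refill(now)
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(max_tokens=50, tokens_per_fill=10, fill_interval=120)
burst = [bucket.allow(now=0) for _ in range(60)]     # 60 requests at once
later = [bucket.allow(now=120) for _ in range(15)]   # 15 more after one fill
print(burst.count(True), later.count(True))          # prints: 50 10
```

In this sketch the first burst drains the bucket, and one fill interval later only `tokens_per_fill` requests get through.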

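When the bucket has no tokens left, the request is rate limited: by default, Envoy responds with HTTP 429 and sets the x-envoy-ratelimited response header. However, we can configure the rate limiter to return a custom HTTP status code and configure additional response headers.

Local rate limiter example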

I'm installing Istio with the demo profile to get the egress gateway installed as well. I've also installed Prometheus, Grafana, and Zipkin.

istioctl install --set profile=demo -y

$ istioctl version

client version: 1.15.0

control plane version: 1.15.0

data plane version: 1.15.0 (2 proxies)

$ kubectl get po -n istio-system

NAME READY STATUS RESTARTS AGE

istio-egressgateway-6854f6dc6f-gz6v6 1/1 Running 0 66s

istio-ingressgateway-7c7c7b5bf9-nppw8 1/1 Running 0 66s

istiod-857cb8c78d-gnph2 1/1 Running 0 82s

We'll deploy the httpbin application and then use a curl Pod inside the cluster to test things out. Before deploying, make sure you add the istio-injection=enabled label to the namespace!

$ kubectl get ns --show-labels

NAME STATUS AGE LABELS

default Active 15m istio-injection=enabled,kubernetes.io/metadata.name=default

istio-system Active 4m28s kubernetes.io/metadata.name=istio-system

kube-node-lease Active 15m kubernetes.io/metadata.name=kube-node-lease

kube-public Active 15m kubernetes.io/metadata.name=kube-public

kube-system Active 15m kubernetes.io/metadata.name=kube-system

$ kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/httpbin/httpbin.yaml

serviceaccount/httpbin created

service/httpbin created

deployment.apps/httpbin created

$ kubectl get po

NAME READY STATUS RESTARTS AGE

httpbin-74fb669cc6-q4jhj 2/2 Running 0 79s

name: envoy.filters.http.local_ratelimit
typed_config:
  '@type': type.googleapis.com/udpa.type.v1.TypedStruct
  type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
  value:
    stat_prefix: http_local_rate_limiter
    enable_x_ratelimit_headers: DRAFT_VERSION_03
    token_bucket:
      max_tokens: 50
      tokens_per_fill: 10
      fill_interval: 120s
    filter_enabled:
      runtime_key: local_rate_limit_enabled
      default_value:
        numerator: 100
        denominator: HUNDRED
    filter_enforced:
      runtime_key: local_rate_limit_enforced
      default_value:
        numerator: 100
        denominator: HUNDRED

We're configuring a token bucket with a maximum of 50 tokens (max_tokens), and a fill rate of 10 tokens (tokens_per_fill) every 2 minutes (fill_interval). We're also enabling the rate limiter (filter_enabled) for 100% of the requests and enforcing (filter_enforced) it for 100% of the requests. We are also setting a stat_prefix, which is used to generate metrics. The enable_x_ratelimit_headers option enables the response headers that show the rate limit status. By default, the headers are disabled.

An EnvoyFilter that applies this rate limiter to the httpbin workload might look like:

apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: httpbin-ratelimit
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      app: httpbin
      version: v1
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: 'envoy.filters.network.http_connection_manager'
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            '@type': type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              enable_x_ratelimit_headers: DRAFT_VERSION_03
              token_bucket:
                max_tokens: 50
                tokens_per_fill: 10
                fill_interval: 120s
              filter_enabled:
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
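To get a feel for what the token_bucket values in this configuration mean in practice, here is a small worked calculation. It is only a sketch: `bucket_summary` is a hypothetical helper, and it assumes the bucket refills in whole steps of tokens_per_fill once per fill_interval.

```python
def bucket_summary(max_tokens, tokens_per_fill, fill_interval_s):
    """Derive burst size, sustained rate, and full-refill time from bucket settings."""
    # Long-run average number of requests allowed per minute.
    sustained_per_minute = tokens_per_fill * 60 / fill_interval_s
    # Number of fills needed to go from empty to full (ceiling division).
    fills_to_full = -(-max_tokens // tokens_per_fill)
    return {
        "burst": max_tokens,
        "sustained_per_minute": sustained_per_minute,
        "seconds_to_refill_from_empty": fills_to_full * fill_interval_s,
    }


summary = bucket_summary(max_tokens=50, tokens_per_fill=10, fill_interval_s=120)
print(summary)
# {'burst': 50, 'sustained_per_minute': 5.0, 'seconds_to_refill_from_empty': 600}
```

Under these assumptions, the workload accepts a burst of 50 requests, then averages about 5 requests per minute, and an empty bucket needs 10 minutes without traffic to fill up again.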

Note

We're deploying the EnvoyFilter in the istio-system (global) namespace. This means the EnvoyFilter will be applied to any workloads in the mesh that match the workload selector.

- Workload selector (workloadSelector): The workload selector is used to select the workload to which the EnvoyFilter should be applied. In this case, we're choosing the httpbin workload by the app and version labels.

- Apply to (applyTo) & match sections (match): The applyTo section tells Istio where we want to apply the patch. We're using the HTTP_FILTER value, specifying the SIDECAR_INBOUND context (i.e., only apply the configuration changes for the inbound requests to the sidecar), and specifying a match on the specific filter (http_connection_manager). These lines will "select" the http_filters section in the Envoy configuration and allow us to either apply a patch to an existing filter or insert a new one.

- The patch (patch): The patch section is where we specify the actual configuration changes. We're using the INSERT_BEFORE operation to insert the rate limiter filter at the top of the HTTP filter list.

...
"http_filters": [
  {
    "name": "envoy.filters.http.local_ratelimit",
    "typed_config": {
      "@type": "type.googleapis.com/udpa.type.v1.TypedStruct",
      "type_url": "type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit",
      "value": {
        "stat_prefix": "http_local_rate_limiter",
        "enable_x_ratelimit_headers": "DRAFT_VERSION_03",
        "token_bucket": {
          "max_tokens": 50,
          "tokens_per_fill": 10,
          "fill_interval": "120s"
        },
        "filter_enabled": {
          "default_value": {
            "numerator": 100
          },
          "runtime_key": "local_rate_limit_enabled"
        },
        "filter_enforced": {
          "default_value": {
            "numerator": 100
          },
          "runtime_key": "local_rate_limit_enforced"
        }
      }
    }
  },
  {
    "name": "istio.metadata_exchange",
    "typed_config": {
...
]

Note

You can use istioctl dash envoy deploy/httpbin to see the Envoy configuration for a specific workload.

Let's send some requests to the httpbin service and try to trigger the rate limiter. I've created another Pod inside the cluster that I am using to send the requests.

The following loop keeps sending requests to the httpbin service:

while true; do curl http://httpbin.default.svc.cluster.local:8000/headers; done

You'll quickly start seeing the local_rate_limited responses and the HTTP 429. You can press CTRL+C to stop sending requests.

$ curl -v httpbin:8000/headers

> GET /headers HTTP/1.1

> User-Agent: curl/7.35.0

> Host: httpbin:8000

> Accept: */*

>

< HTTP/1.1 429 Too Many Requests

< content-length: 18

< content-type: text/plain

< x-ratelimit-limit: 50

< x-ratelimit-remaining: 0

< x-ratelimit-reset: 96

< date: Sun, 04 Sep 2022 22:46:50 GMT

< server: envoy

< x-envoy-upstream-service-time: 1

<

local_rate_limited
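A client can use the x-ratelimit-* headers from a response like the one above to decide how long to wait before retrying. The following is a minimal sketch, assuming the headers carry integer values and that x-ratelimit-reset is expressed in seconds, as in this output; `parse_rate_limit_headers` and `seconds_until_retry` are hypothetical helper names.

```python
def parse_rate_limit_headers(headers):
    """Extract the x-ratelimit-* values from a dict of response headers."""
    lowered = {key.lower(): value for key, value in headers.items()}
    return {
        name: int(lowered[f"x-ratelimit-{name}"])
        for name in ("limit", "remaining", "reset")
        if f"x-ratelimit-{name}" in lowered
    }


def seconds_until_retry(status, headers):
    """Suggest a wait time: the reset value on an exhausted 429, otherwise 0."""
    info = parse_rate_limit_headers(headers)
    if status == 429 and info.get("remaining", 1) == 0:
        return info.get("reset", 0)
    return 0


# Header values copied from the curl output above.
response_headers = {
    "x-ratelimit-limit": "50",
    "x-ratelimit-remaining": "0",
    "x-ratelimit-reset": "96",
}
print(seconds_until_retry(429, response_headers))  # prints: 96
```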

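The x-ratelimit-* headers are added by the rate limiter filter, and they show the original limit, remaining tokens, and the time until the next refill (reset).

If you wait for a couple of minutes (the fill_interval we've configured), you'll notice that the rate limiter will allow you to send more requests.

Next, let's configure a custom HTTP status code and a custom response header in place of the x-ratelimit-* headers.

Note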

The HTTP status codes are represented as an enum in Envoy, so we can't just put a number there. We need to use the enum name.

apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: httpbin-ratelimit
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      app: httpbin
      version: v1
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: SIDECAR_INBOUND
        listener:
          filterChain:
            filter:
              name: 'envoy.filters.network.http_connection_manager'
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            '@type': type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              token_bucket:
                max_tokens: 50
                tokens_per_fill: 10
                fill_interval: 120s
              filter_enabled:
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              response_headers_to_add:
                - append_action: APPEND_IF_EXISTS_OR_ADD
                  header:
                    key: x-rate-limited
                    value: TOO_MANY_REQUESTS
              status:
                code: BadRequest

$ curl -v httpbin:8000/headers

> GET /headers HTTP/1.1

> User-Agent: curl/7.35.0

> Host: httpbin:8000

> Accept: */*

>

< HTTP/1.1 400 Bad Request

< x-rate-limited: TOO_MANY_REQUESTS

< content-length: 18

< content-type: text/plain

< date: Sun, 04 Sep 2022 23:03:26 GMT

< server: envoy

< x-envoy-upstream-service-time: 5

<

local_rate_limited

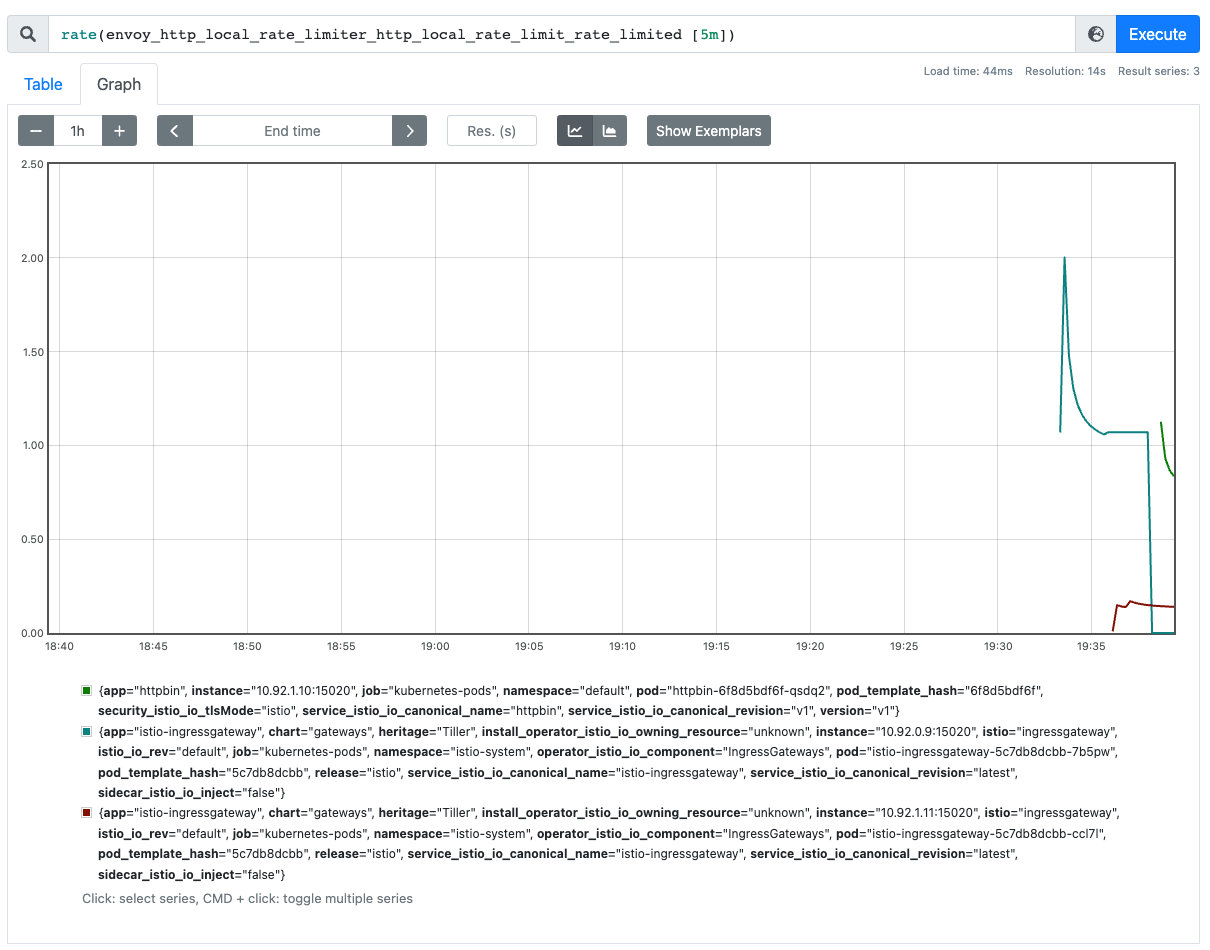

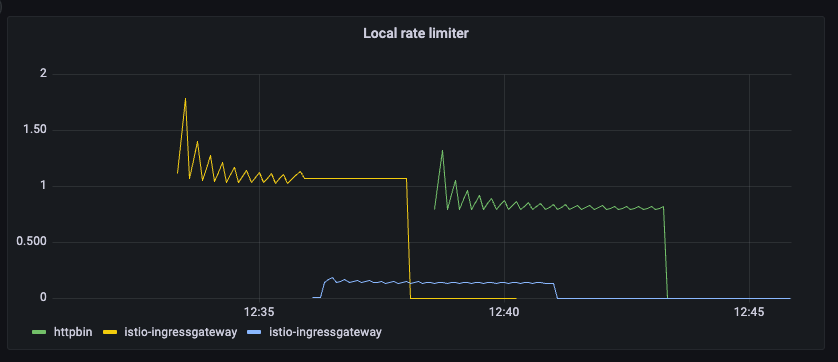

Rate limiter metrics

| Metric | Description |
|---|---|
| <stat_prefix>.http_local_rate_limit.enabled | Total number of requests for which the rate limiter was consulted |
| <stat_prefix>.http_local_rate_limit.ok | Total number of under-limit responses from the token bucket |
| <stat_prefix>.http_local_rate_limit.rate_limited | Total number of responses without a token (but not necessarily enforced) |
| <stat_prefix>.http_local_rate_limit.enforced | Total number of rate limited responses (e.g., when 429 is returned) |

The metrics are prefixed with <stat_prefix>.http_local_rate_limit, where <stat_prefix> is the value we've configured in the stat_prefix field (e.g. http_local_rate_limiter).

Note

You might wonder why we use a generic http_local_rate_limiter name for the stat_prefix field. The reason is that it allows us to use the same metric name and then use attributes such as app or version to differentiate between rate limiters on different workloads.
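The difference between the rate_limited and enforced counters in the table above follows from the filter_enabled and filter_enforced percentages. The sketch below shows one way to picture how the four counters could be incremented for a single request. It is an illustration under stated assumptions, not Envoy's source code: `Stats` and `handle` are hypothetical names, and the random percentage checks are replaced by explicit roll values in the range 0-99 so that the example is deterministic.

```python
from dataclasses import dataclass


@dataclass
class Stats:
    enabled: int = 0
    ok: int = 0
    rate_limited: int = 0
    enforced: int = 0


def handle(stats, has_token, enabled_pct, enforced_pct, roll_enabled, roll_enforced):
    """Return True if the request is forwarded, False if it is rejected."""
    if roll_enabled >= enabled_pct:
        return True                # rate limiter not consulted for this request
    stats.enabled += 1
    if has_token:
        stats.ok += 1              # a token was available
        return True
    stats.rate_limited += 1        # no token left in the bucket
    if roll_enforced < enforced_pct:
        stats.enforced += 1        # the limit is enforced: reject the request
        return False
    return True                    # counted as rate limited, but let through


stats = Stats()
# Enabled for 100% of requests but enforced for 0%: counted, not rejected.
forwarded = handle(stats, has_token=False, enabled_pct=100, enforced_pct=0,
                   roll_enabled=0, roll_enforced=0)
print(forwarded, stats)
```

With both percentages at 100, as in the configuration used in this article, every request without a token is both counted as rate_limited and enforced.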

We need to add the following annotation to the httpbin deployment (the Pod spec template) to tell Istio to enable collection of the http_local_rate_limit statistics from Envoy:

template:
  metadata:
    annotations:
      proxy.istio.io/config: |-
        proxyStatsMatcher:
          inclusionRegexps:
          - ".*http_local_rate_limit.*"

You can edit the deployment directly or use kubectl patch to do it:

kubectl patch deployment httpbin --type merge -p '{"spec":{"template":{"metadata":{"annotations":{"proxy.istio.io/config":"proxyStatsMatcher:\n inclusionRegexps:\n - \".*http_local_rate_limit.*\""}}}}}'

After sending several requests to the httpbin service, we'll see the following metrics from the Envoy sidecar:

# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_enabled counter

envoy_http_local_rate_limiter_http_local_rate_limit_enabled{} 179

# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_enforced counter

envoy_http_local_rate_limiter_http_local_rate_limit_enforced{} 129

# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_ok counter

envoy_http_local_rate_limiter_http_local_rate_limit_ok{} 50

# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited counter

envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited{} 129
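As a quick sanity check on these numbers, the counters should add up: every consulted request either got a token (ok) or did not (rate_limited). Here is a minimal sketch that parses the output above and verifies this; `parse_counters` is a hypothetical helper that only handles the simple `name{} value` lines shown here, not the full Prometheus exposition format.

```python
SAMPLE = """\
# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_enabled counter
envoy_http_local_rate_limiter_http_local_rate_limit_enabled{} 179
# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_enforced counter
envoy_http_local_rate_limiter_http_local_rate_limit_enforced{} 129
# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_ok counter
envoy_http_local_rate_limiter_http_local_rate_limit_ok{} 50
# TYPE envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited counter
envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited{} 129
"""


def parse_counters(text):
    """Map metric name to integer value, skipping comment and blank lines."""
    counters = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name_and_labels, value = line.rsplit(" ", 1)
        counters[name_and_labels.split("{", 1)[0]] = int(value)
    return counters


prefix = "envoy_http_local_rate_limiter_http_local_rate_limit_"
counters = parse_counters(SAMPLE)
enabled = counters[prefix + "enabled"]
ok = counters[prefix + "ok"]
rate_limited = counters[prefix + "rate_limited"]

assert ok + rate_limited == enabled  # 50 + 129 == 179
print(f"{rate_limited / enabled:.1%} of consulted requests were rate limited")
```

The 50 ok responses match the max_tokens value of the bucket, and because the limit is enforced for 100% of requests, the enforced and rate_limited counters are equal.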

Rate limiting at the ingress gateway

To configure the rate limiter at the ingress gateway, we can use the same configuration as before and apply it to the istio-ingressgateway workload instead. Because there's a differentiation between the configuration for sidecars and gateways, we need to use a different context in the EnvoyFilter resource (GATEWAY).

apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: ingress-ratelimit
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      istio: ingressgateway
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: GATEWAY
        listener:
          filterChain:
            filter:
              name: 'envoy.filters.network.http_connection_manager'
              subFilter:
                name: 'envoy.filters.http.router'
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            '@type': type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              token_bucket:
                max_tokens: 50
                tokens_per_fill: 10
                fill_interval: 120s
              filter_enabled:
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              response_headers_to_add:
                - append_action: APPEND_IF_EXISTS_OR_ADD
                  header:
                    key: x-rate-limited
                    value: TOO_MANY_REQUESTS
              status:
                code: BadRequest

To test this, we need to expose the httpbin workload through the ingress gateway. We can do that by creating a Gateway and a VirtualService resource:

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: gateway
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - '*'
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin
spec:
  hosts:
    - '*'
  gateways:
    - gateway
  http:
    - route:
        - destination:
            host: httpbin.default.svc.cluster.local
            port:
              number: 8000

export GATEWAY_IP=$(kubectl get svc -n istio-system istio-ingressgateway -ojsonpath='{.status.loadBalancer.ingress[0].ip}')

curl $GATEWAY_IP/headers

{
  "headers": {
    "Accept": "*/*",
    "Host": "34.168.44.88",
    "User-Agent": "curl/7.74.0",
    "X-B3-Parentspanid": "9d24ff54dd5b10f1",
    "X-B3-Sampled": "1",
    "X-B3-Spanid": "f9aa7bf2e4284b6f",
    "X-B3-Traceid": "13bb49a9d3af3cb79d24ff54dd5b10f1",
    "X-Envoy-Attempt-Count": "1",
    "X-Envoy-Internal": "true",
    "X-Forwarded-Client-Cert": "By=spiffe://cluster.local/ns/default/sa/httpbin;Hash=d17dc0e96cfdd66c73a0b5664e69a82a8b07dfae92f7386439bff75ed3730ea0;Subject=\"\";URI=spiffe://cluster.local/ns/istio-system/sa/istio-ingressgateway-service-account"
  }
}

while true; do curl $GATEWAY_IP/headers; done

$ curl -v $GATEWAY_IP/headers

* Trying 34.168.44.88:80...

* Connected to 34.168.44.88 (34.168.44.88) port 80 (#0)

> GET /headers HTTP/1.1

> Host: 34.168.44.88

> User-Agent: curl/7.74.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 400 Bad Request

< x-rate-limited: TOO_MANY_REQUESTS

< content-length: 18

< content-type: text/plain

< date: Sun, 04 Sep 2022 23:22:18 GMT

< server: istio-envoy

< connection: close

<

* Closing connection 0

local_rate_limited

Rate limiting at the egress gateway

- Deploying a sleep workload - you don't necessarily need to do this; we'll use the curl Pod from the previous example

- Enabling Envoy access logs. Technically, you don't need to do this to confirm the rate limiter is working, as we'll get a 429 response, but it's helpful to see the logs to make sure traffic is going through the egress gateway

- Deploying an egress gateway (if you don't have one already)

- Creating the ServiceEntry, Gateway, DestinationRule, and VirtualService resources to route the traffic to the external service (edition.cnn.com)

...

[2022-09-04T23:28:56.017Z] "GET /politics HTTP/2" 301 - via_upstream - "-" 0 0 21 20 "10.92.1.6" "curl/7.85.0-DEV" "ecad53e2-e6de-929b-8592-94468a55cef7" "edition.cnn.com" "151.101.195.5:80" outbound|80||edition.cnn.com 10.92.2.9:32836 10.92.2.9:8080 10.92.1.6:35122 - -

...

Because we're routing traffic through the egress gateway only for a specific host (edition.cnn.com), we'll do the same in the rate limiter and configure it to only apply to the edition.cnn.com:80 virtual host. Let's look at the EnvoyFilter:

apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: egress-ratelimit
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      istio: egressgateway
  configPatches:
    - applyTo: HTTP_FILTER
      match:
        context: GATEWAY
        listener:
          filterChain:
            filter:
              name: 'envoy.filters.network.http_connection_manager'
      patch:
        operation: INSERT_BEFORE
        value:
          name: envoy.filters.http.local_ratelimit
          typed_config:
            '@type': type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
    - applyTo: HTTP_ROUTE
      match:
        context: GATEWAY
        routeConfiguration:
          vhost:
            name: 'edition.cnn.com:80'
            route:
              action: ANY
      patch:
        operation: MERGE
        value:
          typed_per_filter_config:
            envoy.filters.http.local_ratelimit:
              '@type': type.googleapis.com/udpa.type.v1.TypedStruct
              type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
              value:
                stat_prefix: http_local_rate_limiter
                token_bucket:
                  max_tokens: 50
                  tokens_per_fill: 10
                  fill_interval: 120s
                filter_enabled:
                  runtime_key: local_rate_limit_enabled
                  default_value:
                    numerator: 100
                    denominator: HUNDRED
                filter_enforced:
                  runtime_key: local_rate_limit_enforced
                  default_value:
                    numerator: 100
                    denominator: HUNDRED
                response_headers_to_add:
                  - append_action: APPEND_IF_EXISTS_OR_ADD
                    header:
                      key: x-rate-limited
                      value: TOO_MANY_REQUESTS
                status:
                  code: BadRequest

The second patch adds the typed_per_filter_config to the route configuration. This is where we configure the rate limiter for the specific virtual host. Because we need to target a particular virtual host, we'll provide the routeConfiguration in the match section. Inside that, we're targeting a specific virtual host (edition.cnn.com:80) and all routes on that host (action: ANY). Note that we're using the MERGE operation because the virtual host and route configuration already exist.

Apply the EnvoyFilter, go to the curl Pod, and try to send requests to edition.cnn.com:

$ curl -v edition.cnn.com

> GET / HTTP/1.1

> User-Agent: curl/7.35.0

> Host: edition.cnn.com

> Accept: */*

>

< HTTP/1.1 400 Bad Request

< x-rate-limited: TOO_MANY_REQUESTS

< content-length: 18

< content-type: text/plain

< date: Sun, 04 Sep 2022 23:42:52 GMT

< server: envoy

< x-envoy-upstream-service-time: 1

<

local_rate_limited

Conclusion

GET requests on /api/v1/posts path, or POST requests on /api/v1/users path, and so on.