Running AI On OCI Ampere A1 Instance

In this article, I will demonstrate the steps for preparing and launching compute-intensive AI applications on the OCI Ampere A1 shape to take advantage of the best-in-class AI inference performance of the Ampere Altra processor and Ampere Inference Optimizer (AIO).

Create the free Ampere VM

The free VM uses the VM.Standard.A1.Flex shape and has 4 OCPUs and 24 GB of memory.

Note

Per the Oracle pricing page, the Oracle CPU (OCPU) unit of measurement provides the CPU capacity equivalent to one physical core of a processor. On CPUs with hyper-threading enabled, a single OCPU corresponds to two hardware execution threads, known as vCPUs. In the case of A1 instances, one OCPU consists of one vCPU.
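The OCPU-to-vCPU relationship described in the note can be sketched in a few lines of Python. This is only an illustration: the dictionary keys `ampere_a1` and `x86_hyperthreaded` and the function name `vcpus_for` are labels I made up, not official OCI identifiers.

```python
# Hypothetical labels for the two cases described in the note above.
THREADS_PER_OCPU = {
    "ampere_a1": 1,          # one OCPU = one physical core = one vCPU
    "x86_hyperthreaded": 2,  # one OCPU = one physical core = two vCPUs
}


def vcpus_for(ocpus: int, cpu_family: str) -> int:
    """Return the number of vCPUs (hardware threads) for a given OCPU count."""
    return ocpus * THREADS_PER_OCPU[cpu_family]


# A 4-OCPU A1 instance and a 2-OCPU hyper-threaded instance expose the
# same number of vCPUs.
print(vcpus_for(4, "ampere_a1"))
print(vcpus_for(2, "x86_hyperthreaded"))
```

This is why the comparison later in the article lines the instances up by vCPU count instead of by OCPU count.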

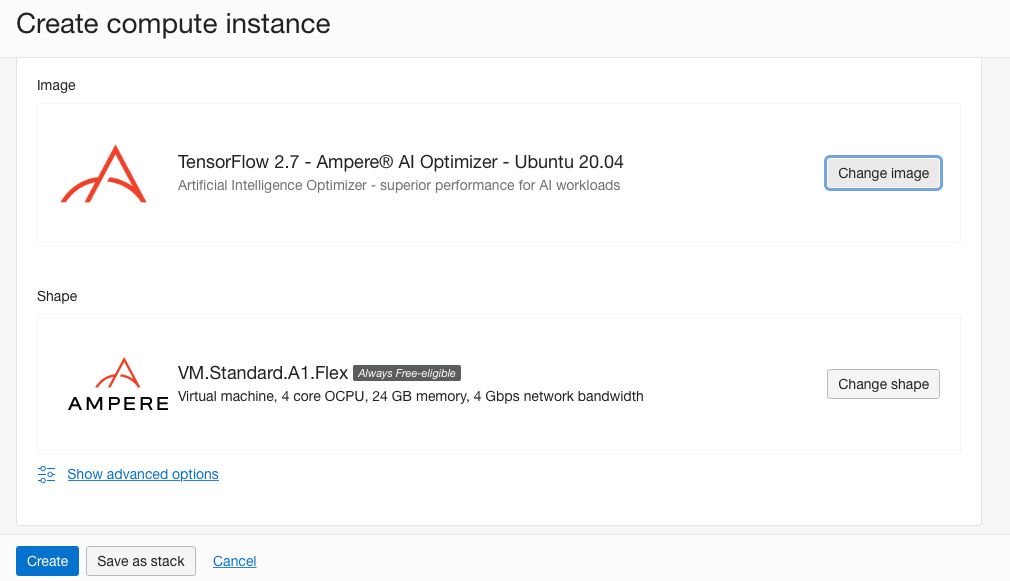

Selecting the image and shape

- Click the Change shape button to select a different VM instance shape. Make sure you have chosen the Virtual machine instance type. You can select the shape series in the next row. We're looking for the Ampere series, which contains the ARM-based processors, and the shape named VM.Standard.A1.Flex (it should be the only shape available in this view).

- Select the VM.Standard.A1.Flex shape and adjust the number of OCPUs to 4 and amount of memory to 24 GB. This is what we're getting for free - forever!

- Click the Select shape button to confirm the selection.

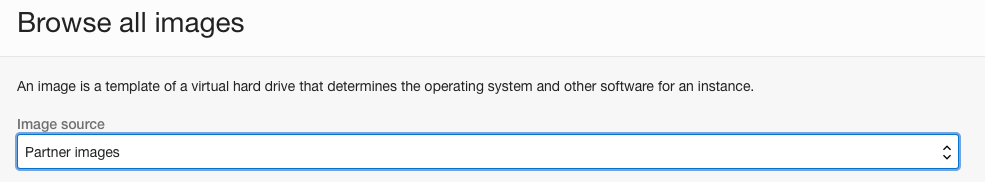

- Click the Change image button. Then let's get the right image for the shape.

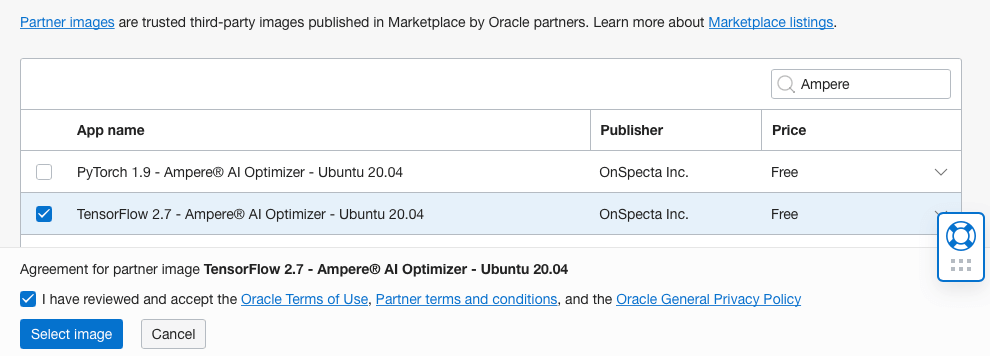

- Select Partner Image in the dropdown menu. Then in the search box, type Ampere. You will see the images available for the Ampere A1 shape.

- Check the Tensorflow 2.7 – Ampere AI Optimizer – Ubuntu 20.04 box and the check box that follows to agree to the "Terms of Use". Then, click the Select Image button. You should see the selected shape and image as shown in the figure below.

Tensorflow 2.7 – Ampere AI Optimizer – Ubuntu 20.04 image selected

Adding SSH keys

Note

You have to set the SSH key file's permission to 400. You can run chmod 400 <keyfile> to do that.
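If you prefer to set and verify the permission from a script, the following is a minimal Python sketch of the same step. The function name `restrict_key_permissions` is my own, and the key path you pass in is whatever file you downloaded.

```python
import os
import stat


def restrict_key_permissions(key_path: str) -> str:
    """Make the key readable by its owner only and return the resulting mode."""
    # stat.S_IRUSR is octal 400, the same mode that chmod 400 <keyfile> sets.
    os.chmod(key_path, stat.S_IRUSR)
    mode = stat.S_IMODE(os.stat(key_path).st_mode)
    return oct(mode)
```

Calling it on your key file should return the string 0o400 on Linux and macOS; ssh refuses private keys that other users can read.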

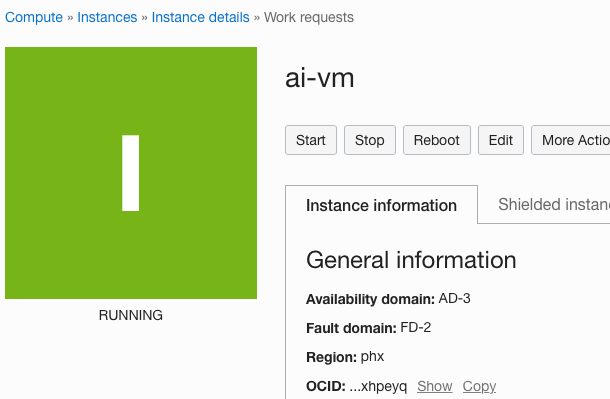

Creating the AI instance

Connecting to the instance

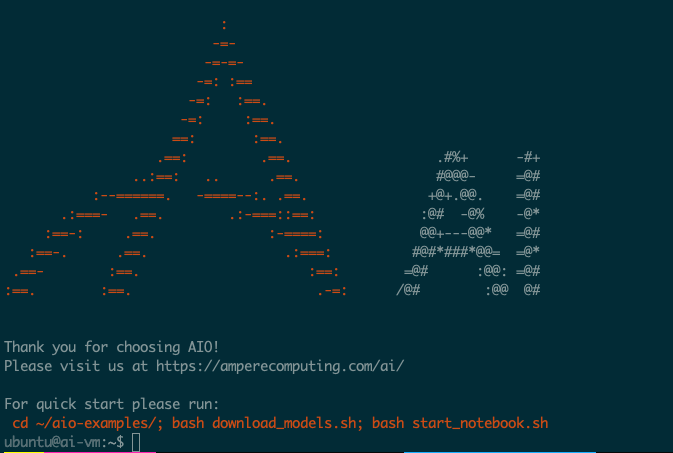

Use the ubuntu username to SSH to the machine.

$ ssh -i <keyfile> ubuntu@<public ip address>

Run sudo reboot and log in again after a minute.

Running Tensorflow-AIO examples

$ cd ~/aio-examples

$ ./download_models.sh

Note

Note that it will take a couple of minutes to download all models to the VM.

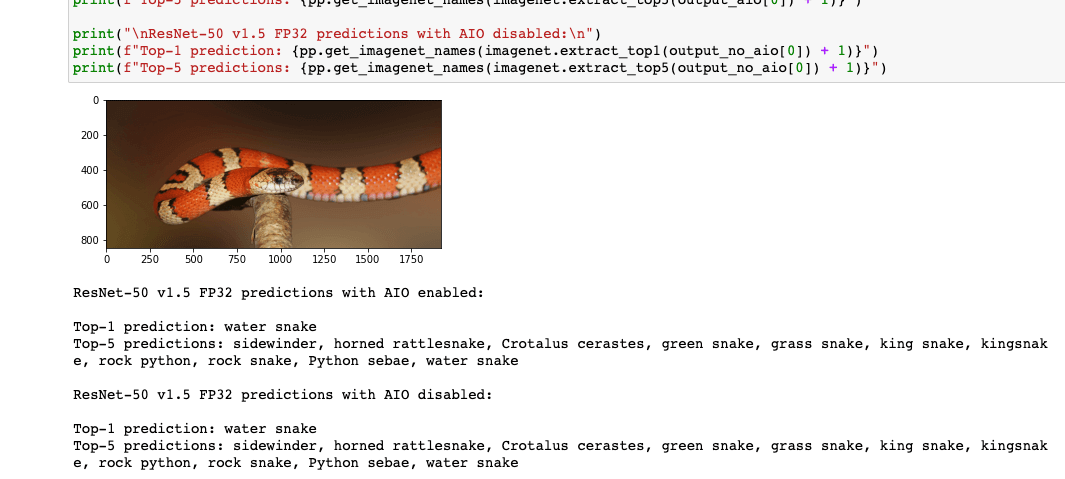

The models are downloaded to ~/aio-examples and into multiple folders (e.g. classification, object_detection). Several models are provided that perform commonly used computer vision inference tasks like image classification and object detection.

The following steps use the resnet_50_v15 model:

Note

What is the resnet50 model? It's a popular model that's one of the MLCommons benchmark components. You can read more about it here.

cd classification/resnet_50_v15

Note

For the Ampere A1 instance, one OCPU corresponds to one physical Ampere Altra processing core, which runs a single thread. It is different from AMD (E3/E4) or Intel Standard3 (S3), where one OCPU corresponds to one physical core with two hyper-threads (HT), that is, two vCPUs.

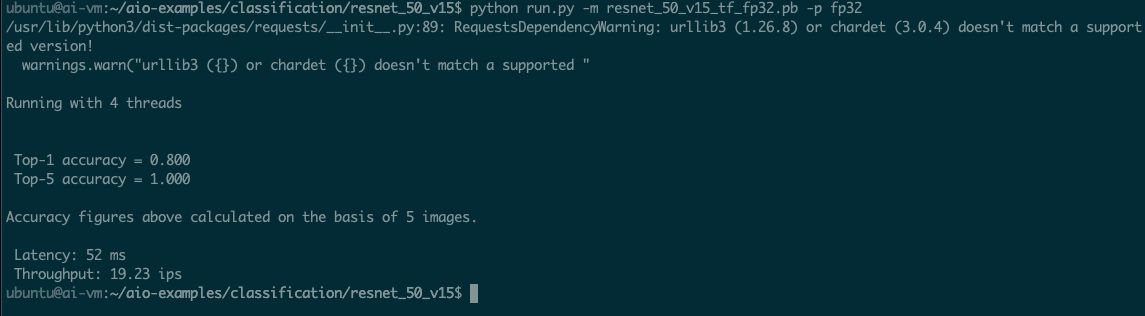

export AIO_NUM_THREADS=4

python run.py -m resnet_50_v15_tf_fp32.pb -p fp32
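If you launch the example from a Python script instead of the shell, the thread count can be passed in through the environment. The sketch below is only an assumption about how you might wire that up: `aio_env` is a hypothetical helper, and AIO_NUM_THREADS is the variable exported above. When no count is given, it falls back to `os.cpu_count()`, which I would expect to report 4 on the 4-OCPU A1 instance.

```python
import os


def aio_env(num_threads=None):
    """Return a copy of the current environment with AIO_NUM_THREADS set."""
    if num_threads is None:
        # Fall back to the number of CPUs the OS reports.
        num_threads = os.cpu_count() or 1
    env = dict(os.environ)
    env["AIO_NUM_THREADS"] = str(num_threads)
    return env


# Example (run from the model folder on the VM):
# import subprocess
# subprocess.run(
#     ["python", "run.py", "-m", "resnet_50_v15_tf_fp32.pb", "-p", "fp32"],
#     env=aio_env(4),
#     check=True,
# )
```

Passing a copy of the environment keeps the setting local to that one run, so other processes started from the same script are not affected.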

Note

FP32, or full-precision floating point, is a number format that occupies 32 bits in memory.

resnet_50_v15 can process 19.23 images per second (ips).

AIO Running Half Precision (FP16) models

Note

FP16 is a half-precision floating-point number format that occupies 16 bits in memory.
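To make the 32-bit and 16-bit sizes concrete, here is a small sketch using only Python's standard `struct` module. It is unrelated to how AIO handles the formats internally; it only shows the storage size of each format and that the smaller one keeps fewer significant digits. The helper name `round_trip` is my own.

```python
import struct

# "f" is IEEE 754 single precision (FP32), "e" is half precision (FP16).
fp32_bytes = struct.calcsize("<f")
fp16_bytes = struct.calcsize("<e")


def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


print("FP32 bits:", fp32_bytes * 8)
print("FP16 bits:", fp16_bytes * 8)
print("0.1 stored as FP32:", round_trip(0.1, "<f"))
print("0.1 stored as FP16:", round_trip(0.1, "<e"))
```

Halving the storage per value means less data to move for each inference, at the cost of some precision.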

python run.py -m resnet_50_v15_tf_fp16.pb -p fp16

ubuntu@ai-vm:~/aio-examples/classification/resnet_50_v15$ python run.py -m resnet_50_v15_tf_fp16.pb -p fp16

/usr/lib/python3/dist-packages/requests/__init__.py:89: RequestsDependencyWarning: urllib3 (1.26.8) or chardet (3.0.4) doesn't match a supported version!

warnings.warn("urllib3 ({}) or chardet ({}) doesn't match a supported "

Running with 4 threads

Top-1 accuracy = 0.800

Top-5 accuracy = 1.000

Accuracy figures above calculated on the basis of 5 images.

Latency: 28 ms

Throughput: 36.29 ips
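If you want to collect these numbers across several runs, the latency and throughput lines can be pulled out of the output with a couple of regular expressions. The sketch below assumes the output format shown above stays the same; `parse_metrics` is a hypothetical helper, not part of aio-examples.

```python
import re

# Output copied from the FP16 run shown above.
SAMPLE_OUTPUT = """Running with 4 threads
Top-1 accuracy = 0.800
Top-5 accuracy = 1.000
Accuracy figures above calculated on the basis of 5 images.
Latency: 28 ms
Throughput: 36.29 ips
"""


def parse_metrics(text: str) -> dict:
    """Extract latency (ms) and throughput (ips) from run.py output."""
    latency = re.search(r"Latency:\s*([\d.]+)\s*ms", text)
    throughput = re.search(r"Throughput:\s*([\d.]+)\s*ips", text)
    return {
        "latency_ms": float(latency.group(1)) if latency else None,
        "throughput_ips": float(throughput.group(1)) if throughput else None,
    }


print(parse_metrics(SAMPLE_OUTPUT))
```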

Comparing the numbers with AMD and Intel Instances (4 vCPUs)

FP32 resnet_50_v15 model:

| OCI Shape | $ / hr | Images per second | Performance (ips) / price |
|---|---|---|---|
| Ampere A1 | 0.04 | 19.23 | 480 |
| AMD E4 | 0.05 | 15.43 | 308 |
| Intel Standard3 | 0.08 | 18.24 | 228 |

FP16 resnet_50_v15 model:

| OCI Shape | $ / hr | Images per second | Performance (ips) / price |
|---|---|---|---|
| Ampere A1 | 0.04 | 36.29 | 907 |
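The last column of the tables above is simply images per second divided by the hourly price. Here is a short sketch that reproduces it for the FP32 results, using `Decimal` so the division is exact. The prices and throughput figures are copied from the table; the variable and function names are my own.

```python
from decimal import Decimal

# (shape, $ per hour, images per second) copied from the FP32 table above.
FP32_RESULTS = [
    ("Ampere A1", "0.04", "19.23"),
    ("AMD E4", "0.05", "15.43"),
    ("Intel Standard3", "0.08", "18.24"),
]


def perf_per_price(price: str, ips: str) -> Decimal:
    """Images per second delivered per dollar-hour."""
    return Decimal(ips) / Decimal(price)


scores = {shape: perf_per_price(price, ips) for shape, price, ips in FP32_RESULTS}

for shape, score in scores.items():
    # The tables show this value truncated to a whole number.
    print(shape, int(score))

# How Ampere A1 compares with the other two shapes on performance per price.
print("vs AMD E4:", round(scores["Ampere A1"] / scores["AMD E4"], 2))
print("vs Intel Standard3:", round(scores["Ampere A1"] / scores["Intel Standard3"], 2))
```

The two ratios at the end are the basis for comparing the shapes on cost efficiency and not on raw throughput alone.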

In performance per price, Ampere A1 running the resnet_50_v15 FP32 model delivers over 1.5x that of AMD's best E4 instance (480 vs. 308). It also offers over 2x that of Intel's Standard3 instance (480 vs. 228).

A visual example with Jupyter Notebook

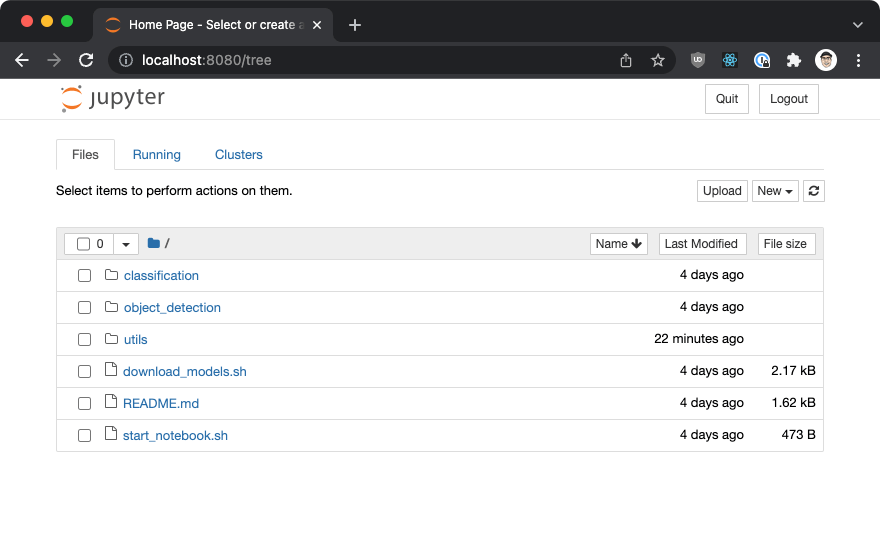

cd ~/aio-examples

./start_notebook.sh

ubuntu@ai-vm:~/aio-examples$ ./start_notebook.sh

On your local system please open a new terminal window and run:

ssh -N -L 8080:localhost:8080 -i ./your_key.key your_user@xxx.xxx.xxx.xxx

After that open one of the links printed out below in your local browser

[I 23:08:01.841 NotebookApp] Writing notebook server cookie secret to /home/ubuntu/.local/share/jupyter/runtime/notebook_cookie_secret

[I 23:08:02.270 NotebookApp] Serving notebooks from local directory: /home/ubuntu/aio-examples

[I 23:08:02.270 NotebookApp] Jupyter Notebook 6.4.8 is running at:

[I 23:08:02.270 NotebookApp] http://localhost:8080/?token=fd98a68431793485bb9dbf8590ad6f571dbabbfa96757b37

[I 23:08:02.270 NotebookApp] or http://127.0.0.1:8080/?token=fd98a68431793485bb9dbf8590ad6f571dbabbfa96757b37

[I 23:08:02.270 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

[C 23:08:02.274 NotebookApp]

To access the notebook, open this file in a browser:

file:///home/ubuntu/.local/share/jupyter/runtime/nbserver-1367-open.html

Or copy and paste one of these URLs:

http://localhost:8080/?token=fd98a68431793485bb9dbf8590ad6f571dbabbfa96757b37

or http://127.0.0.1:8080/?token=fd98a68431793485bb9dbf8590ad6f571dbabbfa96757b37

ssh -N -L 8080:localhost:8080 -i ./your_key.key your_user@xxx.xxx.xxx.xxx

Then open one of the printed links in your local browser (e.g. http://localhost:8080/?token=....).
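If the page does not load, a quick way to check whether the SSH tunnel is actually listening on your local machine is to try a TCP connection to the forwarded port. This is a minimal sketch using Python's standard `socket` module; `is_port_open` is a hypothetical helper, and 8080 is the local port from the ssh -L command above.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 8080, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not reachable.
        return False


# Run this on your local system while the ssh -N -L command is active.
print(is_port_open())
```

A result of False usually means the tunnel command is not running or uses a different local port.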

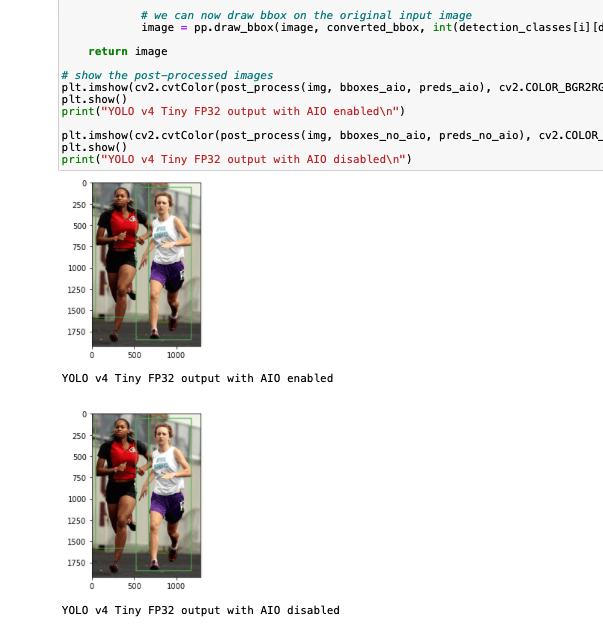

In the Jupyter file browser, find the object_detection folder. Double-click the folder name, then click the examples.ipynb project.

For the classification examples, open the classification folder and then the examples.ipynb project.