Creating a Kubernetes cluster in Google Cloud (LAB)

In this first lab in the series of Kubernetes labs, we'll learn how to create a Kubernetes cluster in Google Cloud.

Creating a Project

- Go to the Cloud Resource Manager and click the Create Project button

- Enter a name for your project, select the billing account and location, and click Create

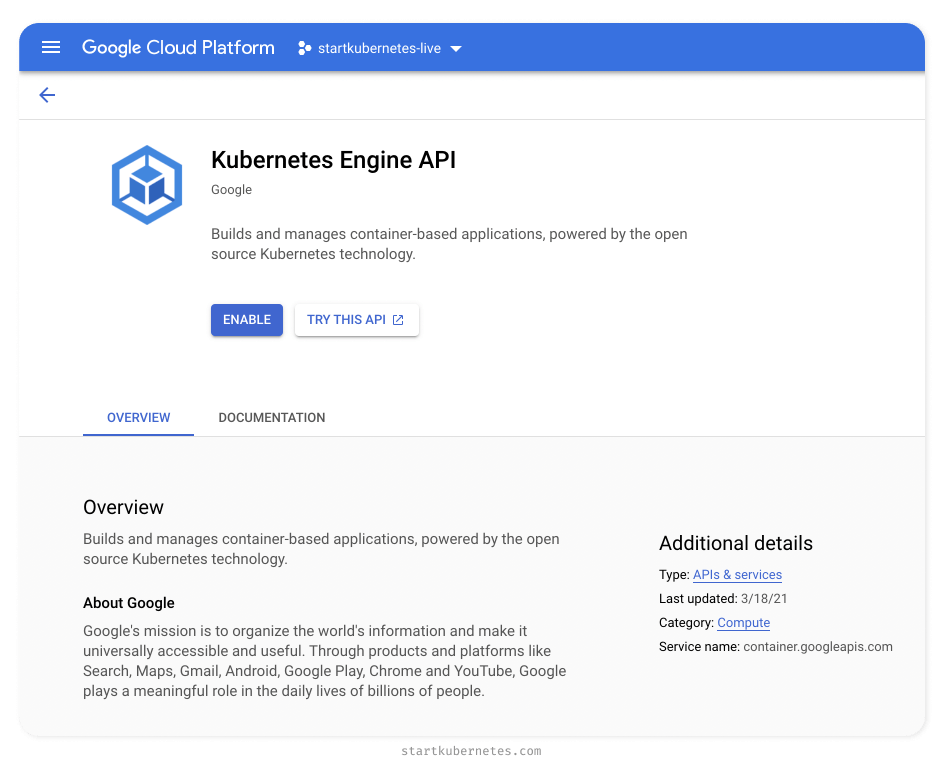

Enable Kubernetes API

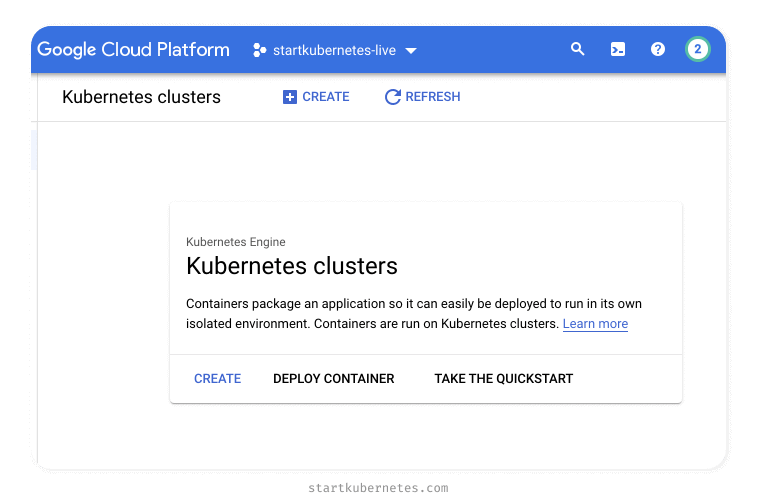

Create a Kubernetes cluster

- Click the Create button.

- Click the Configure button next to the Standard option.

Note

What does the Autopilot option give us? The Autopilot mode will provision and manage the cluster infrastructure (nodes, node pools) for you. It pre-configures and optimizes the cluster for production. You pay for the resources requested by each Pod, rather than for individual nodes as in the Standard mode. There are some unsupported features and a couple of other differences between the two modes. See the GKE documentation for the full comparison.

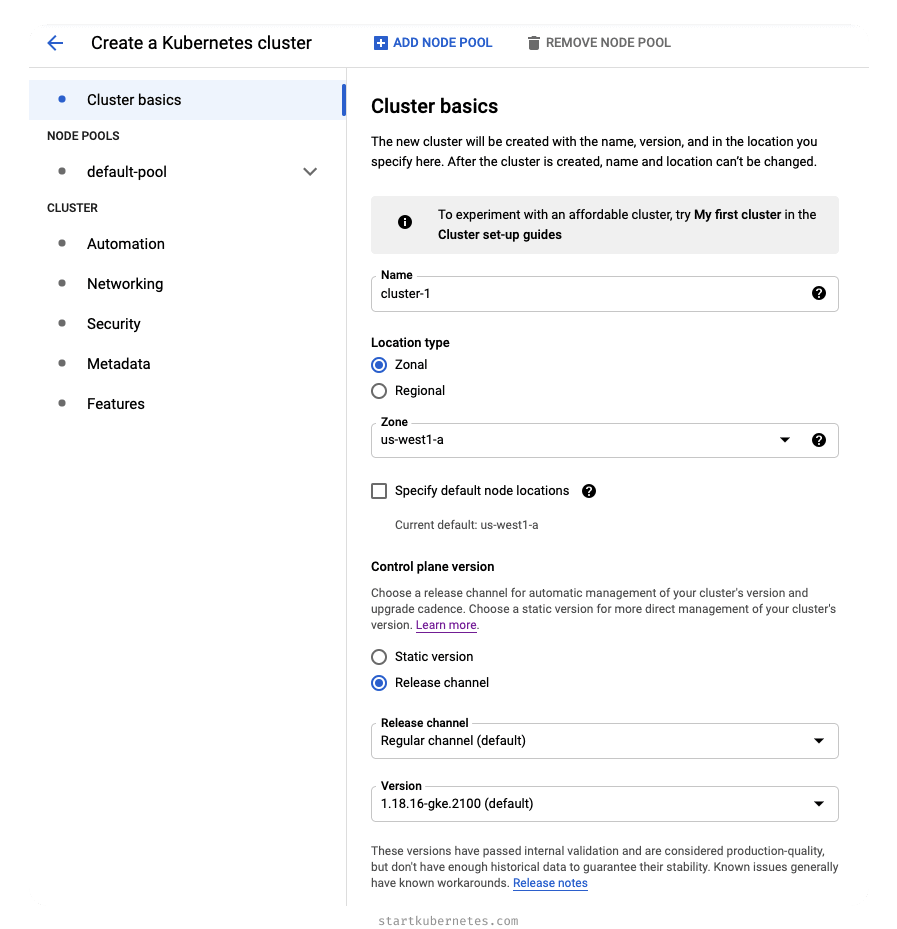

Cluster Basics

- For the name, we can leave it as cluster-1 or pick your own name.

- For the location type, select the Zonal option and the zone that's closest to you.

- For the Release channel, we will leave the defaults - Regular channel and 1.18.16-gke.2100

Note

Zonal vs. regional? If we were creating a production-type cluster, we'd select the Regional option. With the Regional option, GKE replicates the cluster control plane (master node) and the worker nodes across multiple zones within a region. So even if there's an outage within a single zone in a region, your cluster would keep running (assuming not all zones go down).

Node pool

- Click the default-pool option under the Node Pools section in the left sidebar. On this page, we can define what our node pools will look like. A node pool is just a template for how the worker nodes get created for this cluster.

Nodes

The node settings apply to the selected node pool (default-pool in our case). Now you can see how grouping nodes into node pools allows us to create different types of nodes in the cluster. For example, we could create separate node pools with more powerful machines or pools that can autoscale, be in different zones, have different networking settings, etc.

Note

Notice the REST and COMMAND LINE links at the bottom? We could create the same cluster through the API or using the gcloud CLI. Clicking the COMMAND LINE or the REST link will give us the full command to run.
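As a rough illustration of what the COMMAND LINE link produces, the sketch below wraps a much shorter gcloud invocation in a shell function. The function name create_lab_cluster and the zone in the usage line are placeholders made up for this example, and the command generated by the console carries many more flags, so prefer that one when you want an exact match for the settings on screen.

```shell
# Hypothetical helper (not part of gcloud): create a zonal Standard cluster.
# Usage: create_lab_cluster NAME ZONE
create_lab_cluster() {
  name="$1"   # e.g. cluster-1, the default name used in this lab
  zone="$2"   # placeholder: pick a zone close to you

  # --release-channel regular mirrors the Regular channel chosen in the console.
  gcloud container clusters create "$name" \
    --zone "$zone" \
    --release-channel regular
}
```

Calling create_lab_cluster cluster-1 us-central1-a would then request the cluster, assuming the gcloud CLI is installed and authenticated against your project.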

Accessing the cluster

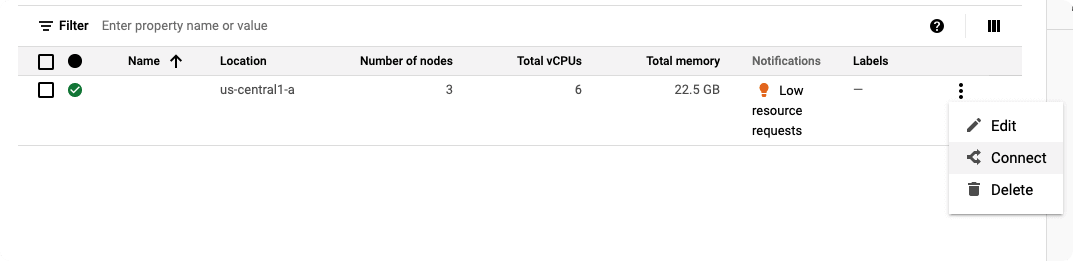

- Click the vertical ellipsis (⋮) next to the Kubernetes cluster in the list.

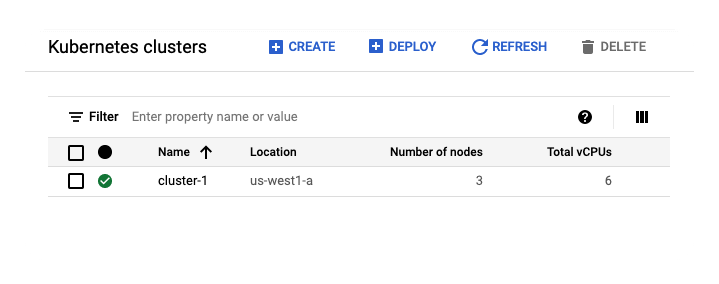

Cluster list

- Select the Connect option.

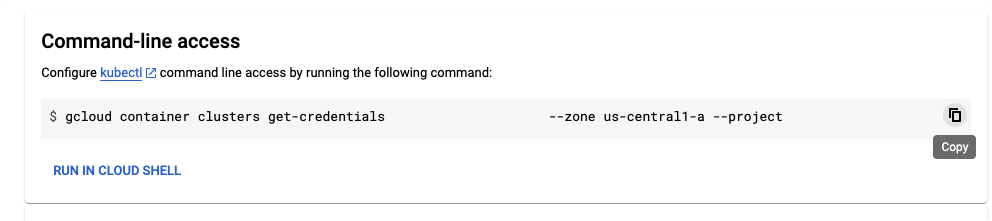

- From the Command-line access section, click the RUN IN CLOUD SHELL link.

Command-line access

- The Cloud Shell window will open, and the gcloud command to connect to the cluster will be pasted into the terminal window. Press Enter to run the command.

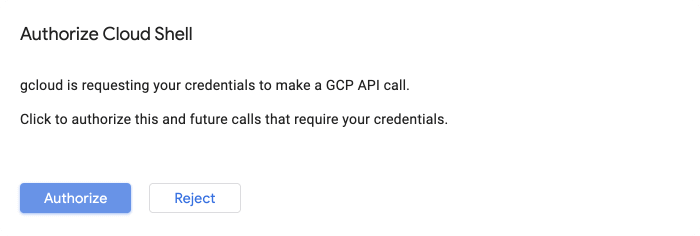

- You will be prompted to authorize Cloud Shell; click Authorize to continue.

Authorize

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

gk3-autopilot-cluster-1-default-pool-0cb291ed-kkdt Ready <none> 7m11s v1.18.17-gke.100

gk3-autopilot-cluster-1-default-pool-cdd16c8b-9b78 Ready <none> 7m25s v1.18.17-gke.100
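For reference, the command that the Connect dialog pastes into Cloud Shell is a gcloud container clusters get-credentials call. The sketch below combines it with the node check shown above. The function name connect_and_list_nodes is hypothetical, and the cluster name, zone, and project ID are arguments you need to supply yourself.

```shell
# Hypothetical helper: fetch kubeconfig credentials, then list the nodes.
# Usage: connect_and_list_nodes CLUSTER ZONE PROJECT_ID
connect_and_list_nodes() {
  cluster="$1"
  zone="$2"
  project="$3"

  # Adds an entry for the cluster to your kubeconfig file.
  gcloud container clusters get-credentials "$cluster" \
    --zone "$zone" \
    --project "$project" || return 1

  # Append -o wide to also see IPs, OS image, and container runtime.
  kubectl get nodes
}
```

Outside of Cloud Shell, this assumes both gcloud and kubectl are installed locally and that you have already logged in with gcloud.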

Exploring the cluster

We'll explore the cluster with kubectl.

We ran kubectl get nodes earlier to list the worker nodes in the cluster.

The get command is a very common command that you will frequently use when working with Kubernetes. As the name suggests, get will display one or more resources.

If you run just kubectl get, you'll get an error saying that you have to specify a type of resource to get.

$ kubectl get

You must specify the type of resource to get. Use "kubectl api-resources" for a complete list of supported resources.

As the error suggests, we can use the kubectl api-resources command to get a complete list of supported resources.

Try running the kubectl api-resources command. A quick warning: the list will be long. There are many resources, names that might look unfamiliar, and perhaps some familiar names (namespaces, nodes?). For each resource, the output shows:

- Name (e.g. bindings, nodes, namespaces, pods, …)

- Optional short name (ns for namespaces, po for pods, …)

- API version (v1, apps/v1, extensions/v1beta1, …)

- Value indicating whether a resource is namespaced (true or false)

- Kind, which represents the kind or type of the resource (e.g. Namespace, Pod, Secret)
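Because the full list is long, it can help to narrow it down. The sketch below shows a few filters that kubectl api-resources supports. The function names are made up for this example; only the kubectl flags inside them come from the CLI.

```shell
# Hypothetical helpers around `kubectl api-resources`.

# List only the resources that live inside a namespace.
list_namespaced_resources() {
  kubectl api-resources --namespaced=true
}

# List only cluster-scoped resources (for example nodes and namespaces).
list_cluster_scoped_resources() {
  kubectl api-resources --namespaced=false
}

# Print just the resource names, one per line.
list_resource_names() {
  kubectl api-resources -o name
}
```

Running list_cluster_scoped_resources, for instance, should show that nodes and namespaces are not namespaced, which matches the true or false column described above.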

Namespaces

Let's list the namespaces in the cluster with kubectl get namespaces:

$ kubectl get namespaces

NAME STATUS AGE

default Active 38m

gatekeeper-system Active 37m

kube-node-lease Active 38m

kube-public Active 38m

kube-system Active 38m

The namespaces resource has a short name, ns. This means that you can use the short name instead of the long name. The command kubectl get ns is equivalent to the above command.

Namespaces that start with kube- are reserved for Kubernetes, and you usually don't create any resources in those namespaces, nor should you name your own namespaces with the kube- prefix.

Note

The gatekeeper-system namespace is specific to the cloud vendor (GKE). It hosts Gatekeeper, an admission controller that validates requests to create and update Pods on the cluster using the Open Policy Agent (OPA).
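Most kubectl commands can be scoped to a single namespace or widened to all of them. The sketch below illustrates both with Pods; the function names are hypothetical and exist only to keep the two variants side by side.

```shell
# Hypothetical helpers showing how kubectl commands are scoped to a namespace.

# List Pods in one namespace (-n is the short form of --namespace).
# Usage: pods_in_namespace NAMESPACE
pods_in_namespace() {
  kubectl get pods --namespace "$1"
}

# List Pods across every namespace in the cluster.
pods_in_all_namespaces() {
  kubectl get pods --all-namespaces
}
```

For example, pods_in_namespace kube-system lists the system Pods, while a plain kubectl get pods only looks at the namespace that is currently selected in your kubeconfig.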

The default namespace is just what the name suggests. It's the namespace where namespaced resources will be created if you don't explicitly set the namespace. For example, if we create a Pod without specifying a namespace, it gets created in the default namespace. However, we can also provide a specific namespace we want the Pod to be created in, either by specifying the namespace in the kubectl command or in the metadata section of the resource definition (YAML).

Describing resources

Another common kubectl command is describe.

Using the describe command, we can get more details of any resource or a group of resources in the cluster.

Let's describe the kube-system namespace. We will use the word describe, followed by the resource type (namespace) and the actual resource name (kube-system):

$ kubectl describe namespace kube-system

Name: kube-system

Labels: <none>

Annotations: <none>

Status: Active

Resource Quotas

Name: gcp-critical-pods

Resource Used Hard

---

pods 19 1G

Name: gke-resource-quotas

Resource Used Hard

---

count/ingresses.extensions 0 100

count/ingresses.networking.k8s.io 0 100

count/jobs.batch 0 5k

pods 21 1500

services 4 500

No LimitRange resource.

The describe command gives us more information than the get command. The output shows the resource details such as name, labels, annotations, status, and resource quotas. Note that the output of the describe command depends on the resource we are describing. For example, when describing Pods, the output will contain the list of events, volumes, containers, IPs, and other data relevant to Pods.
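The same pattern of describe, resource type, and optional resource name works for other resources too. The sketch below shows two variants. The function names are made up for this example, and the Pod name passed to describe_pod has to be one that exists in your cluster.

```shell
# Hypothetical helpers around `kubectl describe`.

# Describe every node in the cluster (no name given, so all are shown).
describe_all_nodes() {
  kubectl describe nodes
}

# Describe a single Pod in a given namespace.
# Usage: describe_pod NAMESPACE POD_NAME
describe_pod() {
  kubectl describe pod "$2" --namespace "$1"
}
```

To find a Pod name to pass in, you could first list the Pods in the namespace with kubectl get pods --namespace kube-system and copy one of the names from the output.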