Exploring Kubernetes Volumes

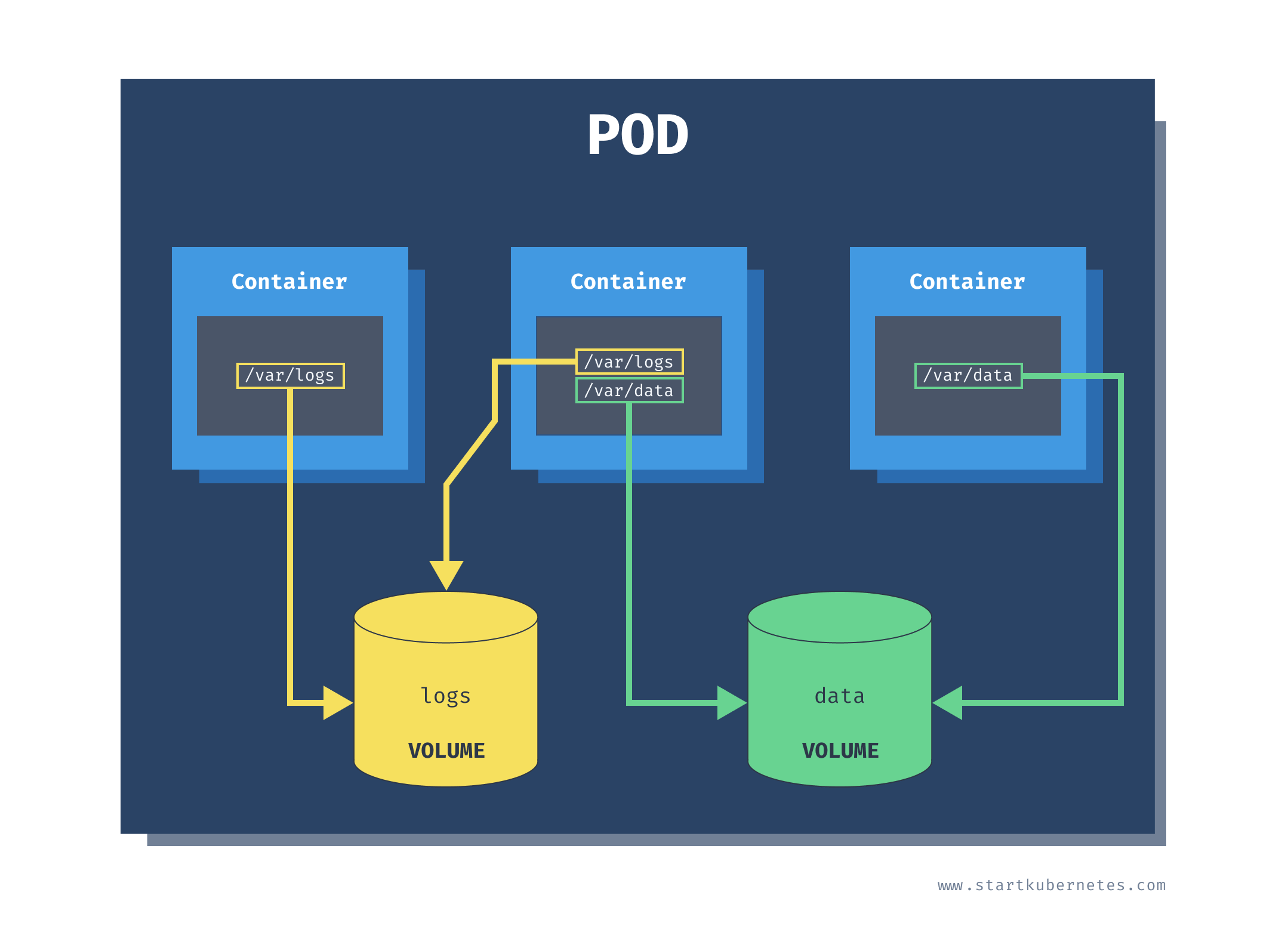

Running stateful workloads inside Kubernetes is different from running stateless services, because containers and Pods can be created and destroyed at any time. If a cluster node goes down or a new node appears, Kubernetes may need to reschedule the Pods. When Pods get rescheduled, what happens to their data? This article covers Kubernetes Volumes and how to use them to store data outside of your containers.

One of the simplest Volume types is an empty directory (`emptyDir`). When you create a Volume with the `emptyDir` type, Kubernetes creates it when it assigns a Pod to a node. The Volume exists for as long as the Pod is running. As the name suggests, it is initially empty, but the containers can write to and read from the Volume. Once you delete the Pod, Kubernetes deletes the Volume as well.

Using a Volume takes two parts. The first part is declaring the Volume under the `volumes` key with a name and a type (`emptyDir` in our case). The second part is mounting the Volume inside of the containers using the `volumeMounts` key. In each Pod you can use multiple different Volumes at the same time.

You mount a Volume by referring to its name (`pod-storage`) and specifying which path we want to mount the Volume under (`/data/`).

Note: Check out Getting started with Kubernetes to set up your cluster and run through the examples in this post.
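To make the two-part process concrete, here is a minimal sketch of a Pod that declares two Volumes and mounts both into one container. The Pod name (`multi-volume-pod`), the Volume names (`scratch`, `cache`), and the mount paths are hypothetical and chosen only for illustration; `medium: Memory` is an optional `emptyDir` setting that backs the Volume with memory (tmpfs) rather than node disk.

```yaml
# Hypothetical example: names and mount paths are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: multi-volume-pod
spec:
  containers:
    - name: alpine
      image: alpine
      args:
        - sleep
        - '3600'
      volumeMounts:
        # Part two: each entry refers to a Volume by name and picks a mount path.
        - name: scratch
          mountPath: /scratch
        - name: cache
          mountPath: /cache
  volumes:
    # Part one: declare the Volumes and their types.
    - name: scratch
      emptyDir: {}
    - name: cache
      emptyDir:
        medium: Memory # back this Volume with tmpfs instead of node disk
```

Each `volumeMounts` entry must name a Volume declared under `volumes`. The walkthrough that follows sticks to a single container and a single Volume.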

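    apiVersion: v1
    kind: Pod
    metadata:
      name: empty-dir-pod
    spec:
      containers:
        - name: alpine
          image: alpine
          # Exit after 2 minutes so that Kubernetes restarts the container
          args:
            - sleep
            - '120'
          volumeMounts:
            # Mount the Volume declared below under /data/
            - name: pod-storage
              mountPath: /data/
      volumes:
        # emptyDir starts out empty and lives as long as the Pod
        - name: pod-storage
          emptyDir: {}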

Save the above YAML in `empty-dir-pod.yaml` and run `kubectl apply -f empty-dir-pod.yaml` to create the Pod.

Note: Check out "Kubernetes CLI (kubectl) tips you didn't know about" to learn more about the `kubectl` command.

Next, use the `kubectl exec` command to get a terminal inside the container:

```text
$ kubectl exec -it empty-dir-pod -- /bin/sh
/ # ls
bin    dev    home   media  opt    root   sbin   sys    usr
data   etc    lib    mnt    proc   run    srv    tmp    var
```

If you run `ls` inside the container, you will notice the `data` folder. The `data` folder is mounted from the `pod-storage` Volume defined in the YAML.

Let's create a file in the `data` folder and wait for the container to restart (after 2 minutes) to prove that the data inside the `data` folder stays around.

From inside the container, create a `hello.txt` file under the `data` folder:

    echo "hello" >> data/hello.txt

Type `exit` to exit the container. If you wait for 2 minutes, the container will automatically restart. To watch the container restart, run the `kubectl get po -w` command from a separate terminal window.

Once the container restarts, you can check that `data/hello.txt` is still in the container:

    $ kubectl exec -it empty-dir-pod -- /bin/sh
    / # ls data/hello.txt
    data/hello.txt
    / # cat data/hello.txt
    hello
    / #

Kubernetes stores the data on the host under the `/var/lib/kubelet/pods` folder. That folder contains one folder per pod ID, and inside each of those folders are the Pod's volumes. For example, here's how you can get the pod ID:

    $ kubectl get po empty-dir-pod -o yaml | grep uid
      uid: 683533c0-34e1-4888-9b5f-4745bb6edced

If you are using Minikube, run `minikube ssh` to get a terminal inside the host Minikube uses to run Kubernetes. Once inside the host, you can find `hello.txt` in the following folder:

    $ sudo cat /var/lib/kubelet/pods/683533c0-34e1-4888-9b5f-4745bb6edced/volumes/kubernetes.io~empty-dir/pod-storage/hello.txt
    hello

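If your cluster runs on a local Docker host instead (for example, Docker Desktop), you can run a privileged container and use `nsenter` to run a shell inside all namespaces of the process with ID 1:

    $ docker run -it --privileged --pid=host debian nsenter -t 1 -m -u -n -i sh
    / #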

From that shell, you can navigate to the `/var/lib/kubelet/pods` folder and find `hello.txt` just like you would if you're using Minikube.

Kubernetes supports many Volume types. Some are generic, such as `emptyDir` or `hostPath` (used for mounting folders from the nodes' filesystem). Other types are either used for cloud-provider storage (such as `azureFile`, `awsElasticBlockStore`, or `gcePersistentDisk`), network storage (`cephfs`, `cinder`, `csi`, `flocker`, ...), or for mounting Kubernetes resources into the Pods (`configMap`, `secret`).

If you use a regular Volume backed by cloud-provider storage (such as `azureFile` or `awsElasticBlockStore`), the data will still be persisted. The persistent volume and persistent volume claims are just a way to abstract how Kubernetes provisions the storage.
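As a rough sketch of that abstraction, the manifests below request storage through a PersistentVolumeClaim and mount it at the same path as the `emptyDir` Volume earlier. The names `data-claim` and `pvc-pod` and the `1Gi` size are hypothetical, and the example assumes the cluster has a default StorageClass that can provision the volume dynamically (Minikube typically ships one).

```yaml
# Hypothetical names throughout; assumes the cluster has a default StorageClass.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pvc-pod
spec:
  containers:
    - name: alpine
      image: alpine
      args:
        - sleep
        - '3600'
      volumeMounts:
        # Mounted exactly like the emptyDir Volume: by name and path.
        - name: pod-storage
          mountPath: /data/
  volumes:
    # The Pod only names the claim; how the storage is provisioned is hidden.
    - name: pod-storage
      persistentVolumeClaim:
        claimName: data-claim
```

Only the `volumes` entry differs from the `emptyDir` example; the `volumeMounts` section has the same shape. Unlike `emptyDir`, the claim and its data are not deleted when the Pod is deleted.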